这篇文章写得挺不错的,就是篇幅长,内容多,而且平时没有解过这方面的bug,感觉理解的并不是特别透彻

VSync信号的科普我们上一篇已经介绍过了,这篇我们要分析在SurfaceFlinger中的作用。(愈发觉得做笔记对自己记忆模块巩固有很多帮助,整理文章不一定是用来给别人看的,但一定是为加强自己记忆的~)

流程基础

从上一篇得知,Android 4.1一个很大的更新是Project Butter,黄油计划,为了解决用户交互体验差的问题(Jelly Bean is crazy fast)。Project Butter对Android Display系统进行了重构,引入了三个核心元素,即VSYNC、Triple Buffer和Choreographer。

硬件加载

上上一节我们初略分析了SurfaceFlinger的启动流程,从SurfaceFlinger类的初始化流程得知,其init方法初始化了很多参数。我们分析Vsync流程需要这些信息,位于frameworks/native/services/surfaceflinger/SurfaceFlinger.cpp中。

显示设备

SurfaceFlinger中需要显示的图层(layer)将通过DisplayDevice对象传递到OpenGLES中进行合成,合成之后的图像再通过HWComposer对象传递到Framebuffer中显示。DisplayDevice对象中的成员变量mVisibleLayersSortedByZ保存了所有需要显示在本显示设备中显示的Layer对象,同时DisplayDevice对象也保存了和显示设备相关的显示方向、显示区域坐标等信息。

DisplayDevice是显示设备的抽象,定义了3中类型的显示设备。引用枚举类位于frameworks/native/services/surfaceflinger/DisplayDevice.h中,定义枚举位于hardware/libhardware/include/hardware/Hwcomposer_defs.h中:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

//DisplayDevice.h enum DisplayType { DISPLAY_ID_INVALID = -1, DISPLAY_PRIMARY = HWC_DISPLAY_PRIMARY, DISPLAY_EXTERNAL = HWC_DISPLAY_EXTERNAL, DISPLAY_VIRTUAL = HWC_DISPLAY_VIRTUAL, NUM_BUILTIN_DISPLAY_TYPES = HWC_NUM_PHYSICAL_DISPLAY_TYPES, }; //Hwcomposer_defs.h /* Display types and associated mask bits. */ enum { HWC_DISPLAY_PRIMARY = 0, HWC_DISPLAY_EXTERNAL = 1, // HDMI, DP, etc. HWC_DISPLAY_VIRTUAL = 2, HWC_NUM_PHYSICAL_DISPLAY_TYPES = 2, HWC_NUM_DISPLAY_TYPES = 3, }; |

- DISPLAY_PRIMARY:主显示设备,通常是LCD屏

- DISPLAY_EXTERNAL:扩展显示设备。通过HDMI输出的显示信号

- DISPLAY_VIRTUAL:虚拟显示设备,通过WIFI输出信号

这三种设备,第一种就是我们手机、电视的主显示屏,另外两种需要硬件扩展。

初始化显示设备模块位于SurfaceFlinger中的init函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 |

void SurfaceFlinger::init() { ...... //初始化HWComposer硬件设备,我们下面会讲到 // actual hardware composer underneath. mHwc = new HWComposer(this, *static_cast<HWComposer::EventHandler *>(this)); //初始化物理显示设备 // initialize our non-virtual displays //物理设备类型总数为2 for (size_t i=0 ; i < DisplayDevice::NUM_BUILTIN_DISPLAY_TYPES ; i++) { DisplayDevice::DisplayType type((DisplayDevice::DisplayType)i); // set-up the displays that are already connected //先调用了HWComposer的isConnected来检查显示设备是否已连接, //只有和显示设备连接的DisplayDevice对象才会被创建出来。 //即使没有任何物理显示设备被检测到, //SurfaceFlinger都需要一个DisplayDevice对象才能正常工作, //因此,DISPLAY_PRIMARY类型的DisplayDevice对象总是会被创建出来。 if (mHwc->isConnected(i) || type==DisplayDevice::DISPLAY_PRIMARY) { // All non-virtual displays are currently considered secure. bool isSecure = true; // 给显示设备分配一个token,一般都是一个binder createBuiltinDisplayLocked(type); wp<IBinder> token = mBuiltinDisplays[i]; //然后会调用createBufferQueue函数创建一个producer和consumer sp<IGraphicBufferProducer> producer; sp<IGraphicBufferConsumer> consumer; BufferQueue::createBufferQueue(&producer, &consumer, new GraphicBufferAlloc()); //然后又创建了一个FramebufferSurface对象,把consumer参数传入代表是一个消费者。 sp<FramebufferSurface> fbs = new FramebufferSurface(*mHwc, i, consumer); int32_t hwcId = allocateHwcDisplayId(type); //创建DisplayDevice对象,代表一类显示设备 sp<DisplayDevice> hw = new DisplayDevice(this, type, hwcId, mHwc->getFormat(hwcId), isSecure, token, fbs, producer, mRenderEngine->getEGLConfig()); //如果不是主屏幕 if (i > DisplayDevice::DISPLAY_PRIMARY) { // FIXME: currently we don't get blank/unblank requests // for displays other than the main display, so we always // assume a connected display is unblanked. ALOGD("marking display %zu as acquired/unblanked", i); //通常我们不会在次屏幕获得熄灭/点亮LCD的请求, //所以我们总是认为次显屏是点亮的 //设置PowerMode为HWC_POWER_MODE_NORMAL hw->setPowerMode(HWC_POWER_MODE_NORMAL); } mDisplays.add(token, hw); } } ...... } |

上述过程就是初始化显示设备,我们分部查看。

1)检查设备连接:

所有显示设备的输出都要通过HWComposer对象完成,因此上面这段代码先调用了HWComposer的isConnected来检查显示设备是否已连接,只有和显示设备连接的DisplayDevice对象才会被创建出来。即使没有任何物理显示设备被检测到,SurfaceFlinger都需要一个DisplayDevice对象才能正常工作,因此,DISPLAY_PRIMARY类型的DisplayDevice对象总是会被创建出来。

createBuiltinDisplayLocked函数就是为显示设备对象创建一个BBinder类型的Token:

|

1 2 3 4 5 6 7 8 9 10 |

void SurfaceFlinger::createBuiltinDisplayLocked(DisplayDevice::DisplayType type) { ALOGW_IF(mBuiltinDisplays[type], "Overwriting display token for display type %d", type); //一般这种token之类的东西,都是一个binder mBuiltinDisplays[type] = new BBinder(); DisplayDeviceState info(type); // All non-virtual displays are currently considered secure. info.isSecure = true; mCurrentState.displays.add(mBuiltinDisplays[type], info); } |

一般这种token之类的东西,都是一个binder,就像我们创建window需要一个token,也是new一个binder然后在WindowManagerService里注册。

2)初始化GraphicBuffer相关内容:(这一部分我们后续分析GraphicBuffer管理相关会仔细分析它,这里只是粗略介绍)

然后会调用createBufferQueue函数创建一个producer和consumer。然后又创建了一个FramebufferSurface对象。这里我们看到在新建FramebufferSurface对象时把consumer参数传入了代表是一个消费者。

而在DisplayDevice的构造函数中(下面会讲到),会创建一个Surface对象传递给底层的OpenGL ES使用,而这个Surface是一个生产者。在OpenGl ES中合成好了图像之后会将图像数据写到Surface对象中,这将触发consumer对象的onFrameAvailable函数被调用。

这就是Surface数据好了就通知消费者来拿数据做显示用,在onFrameAvailable函数汇总,通过nextBuffer获得图像数据,然后调用HWComposer对象mHwc的fbPost函数输出。

FramebufferSurface类位于frameworks/native/services/surfaceflinger/displayhardware/FramebufferSurface.cpp中,我们查看它的的onFrameAvailable函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

// Overrides ConsumerBase::onFrameAvailable(), does not call base class impl. void FramebufferSurface::onFrameAvailable() { sp<GraphicBuffer> buf; sp<Fence> acquireFence; //通过nextBuffer获得图像数据 status_t err = nextBuffer(buf, acquireFence); if (err != NO_ERROR) { ALOGE("error latching nnext FramebufferSurface buffer: %s (%d)", strerror(-err), err); return; } //调用HWComposer的fbPost函数输出图像,fbPost函数最后通过调用Gralloc模块的post函数来输出图像 err = mHwc.fbPost(mDisplayType, acquireFence, buf); if (err != NO_ERROR) { ALOGE("error posting framebuffer: %d", err); } } |

这个流程我们下几节会仔细分析,这里我们粗略过个流程:

通过nextBuffer获得图像数据;

然后调用HWComposer对象mHwc的fbPost函数输出,fbPost函数最后通过调用Gralloc模块的post函数来输出图像。这一部分我们在Android SurfaceFlinger 学习之路(一)—-Android图形显示之HAL层Gralloc模块实现分析过了,可以查看这个链接。

3)创建DisplayDevice对象,用来描述显示设备:

我们再来看看DisplayDevice的构造函数,位于frameworks/native/services/surfaceflinger/DisplayDevice.cpp中:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 |

/* * Initialize the display to the specified values. * */ DisplayDevice::DisplayDevice( const sp<SurfaceFlinger>& flinger, DisplayType type, int32_t hwcId, int format, bool isSecure, const wp<IBinder>& displayToken, const sp<DisplaySurface>& displaySurface, const sp<IGraphicBufferProducer>& producer, EGLConfig config) : lastCompositionHadVisibleLayers(false), mFlinger(flinger), mType(type), mHwcDisplayId(hwcId), mDisplayToken(displayToken), mDisplaySurface(displaySurface), mDisplay(EGL_NO_DISPLAY), mSurface(EGL_NO_SURFACE), mDisplayWidth(), mDisplayHeight(), mFormat(), mFlags(), mPageFlipCount(), mIsSecure(isSecure), mSecureLayerVisible(false), mLayerStack(NO_LAYER_STACK), mOrientation(), mPowerMode(HWC_POWER_MODE_OFF), mActiveConfig(0) { //创建surface,传递给底层的OpenGL ES使用,而这个Surface是一个生产者 mNativeWindow = new Surface(producer, false); ANativeWindow* const window = mNativeWindow.get(); /* * Create our display's surface */ //在OpenGl ES中合成好了图像之后会将图像数据写到Surface对象中 EGLSurface surface; EGLint w, h; EGLDisplay display = eglGetDisplay(EGL_DEFAULT_DISPLAY); if (config == EGL_NO_CONFIG) { config = RenderEngine::chooseEglConfig(display, format); } surface = eglCreateWindowSurface(display, config, window, NULL); eglQuerySurface(display, surface, EGL_WIDTH, &mDisplayWidth); eglQuerySurface(display, surface, EGL_HEIGHT, &mDisplayHeight); // Make sure that composition can never be stalled by a virtual display // consumer that isn't processing buffers fast enough. We have to do this // in two places: // * Here, in case the display is composed entirely by HWC. // * In makeCurrent(), using eglSwapInterval. Some EGL drivers set the // window's swap interval in eglMakeCurrent, so they'll override the // interval we set here. ////虚拟设备不支持图像合成 if (mType >= DisplayDevice::DISPLAY_VIRTUAL) window->setSwapInterval(window, 0); mConfig = config; mDisplay = display; mSurface = surface; mFormat = format; mPageFlipCount = 0; mViewport.makeInvalid(); mFrame.makeInvalid(); // virtual displays are always considered enabled //虚拟设备屏幕认为是不关闭的 mPowerMode = (mType >= DisplayDevice::DISPLAY_VIRTUAL) ? HWC_POWER_MODE_NORMAL : HWC_POWER_MODE_OFF; // Name the display. The name will be replaced shortly if the display // was created with createDisplay(). //根据显示设备类型赋名称 switch (mType) { case DISPLAY_PRIMARY: mDisplayName = "Built-in Screen"; break; case DISPLAY_EXTERNAL: mDisplayName = "HDMI Screen"; break; default: mDisplayName = "Virtual Screen"; // e.g. Overlay #n break; } // initialize the display orientation transform. //调整显示设备视角的大小、位移、旋转等参数 setProjection(DisplayState::eOrientationDefault, mViewport, mFrame); } |

DisplayDevice的构造函数初始化了一些参数,但是最重要的是:创建了一个Surface对象mNativeWindow,同时用它作为参数创建EGLSurface对象,这个EGLSurface对象是OpenGL ES中绘图需要的。

这样,在DisplayDevice中就建立了一个通向Framebuffer的通道,只要向DisplayDevice的mSurface写入数据。就会到消费者FrameBufferSurface的onFrameAvailable函数,然后到HWComposer在到Gralloc模块,最后输出到显示设备。

附(以后会讲到):DisplayDevice有个函数swapBuffers。swapBuffers函数将内部缓冲区的图像数据刷新到显示设备的Framebuffer中,它通过调用eglSwapBuffers函数来完成缓冲区刷新工作:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 |

void DisplayDevice::swapBuffers(HWComposer& hwc) const { // We need to call eglSwapBuffers() if: // (1) we don't have a hardware composer, or // (2) we did GLES composition this frame, and either // (a) we have framebuffer target support (not present on legacy // devices, where HWComposer::commit() handles things); or // (b) this is a virtual display if (hwc.initCheck() != NO_ERROR || (hwc.hasGlesComposition(mHwcDisplayId) && (hwc.supportsFramebufferTarget() || mType >= DISPLAY_VIRTUAL))) { EGLBoolean success = eglSwapBuffers(mDisplay, mSurface); if (!success) { EGLint error = eglGetError(); if (error == EGL_CONTEXT_LOST || mType == DisplayDevice::DISPLAY_PRIMARY) { LOG_ALWAYS_FATAL("eglSwapBuffers(%p, %p) failed with 0x%08x", mDisplay, mSurface, error); } else { ALOGE("eglSwapBuffers(%p, %p) failed with 0x%08x", mDisplay, mSurface, error); } } } status_t result = mDisplaySurface->advanceFrame(); if (result != NO_ERROR) { ALOGE("[%s] failed pushing new frame to HWC: %d", mDisplayName.string(), result); } } |

但是注意调用swapBuffers输出图像是在显示设备不支持硬件composer的情况下。

HWComposer设备

通过上一篇文章得知,Android通过VSync机制来提高显示效果,那么VSync是如何产生的?通常这个信号是由显示驱动产生,这样才能达到最佳效果。但是Android为了能运行在不支持VSync机制的设备上,也提供了软件模拟产生VSync信号的手段。

在SurfaceFlinger中,是通过HWComposer类来表示硬件设备。我们继续产看init函数:

|

1 2 3 4 5 6 7 8 |

void SurfaceFlinger::init() { ...... // Initialize the H/W composer object. There may or may not be an // actual hardware composer underneath. mHwc = new HWComposer(this, *static_cast<HWComposer::EventHandler *>(this)); ...... } |

我们继续查看HWComposer的构造函数,位于frameworks/native/services/surfaceflinger/displayhardware/HWComposer.cpp中:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 |

// --------------------------------------------------------------------------- HWComposer::HWComposer( const sp<SurfaceFlinger>& flinger, EventHandler& handler) : mFlinger(flinger), mFbDev(0), mHwc(0), mNumDisplays(1), mCBContext(new cb_context),//这里直接new了一个设备上下文对象 mEventHandler(handler), mDebugForceFakeVSync(false) { ...... bool needVSyncThread = true; // Note: some devices may insist that the FB HAL be opened before HWC. //装载FrameBuffer的硬件模块 int fberr = loadFbHalModule(); //装载HWComposer的硬件模块,这个函数中会将mHwc置为true loadHwcModule(); //如果有FB和HWC,并且也有pre-1.1 HWC,就要先关闭FB设备 //但这个是暂时的,直到HWC准备好 if (mFbDev && mHwc && hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_1)) { // close FB HAL if we don't needed it. // FIXME: this is temporary until we're not forced to open FB HAL // before HWC. framebuffer_close(mFbDev); mFbDev = NULL; } // If we have no HWC, or a pre-1.1 HWC, an FB dev is mandatory. //如果我们没有HWC硬件支持,或者之前的pre-1.1 HWC,如hwc 1.0,那么FrameBuffer就是必须的 if ((!mHwc || !hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_1)) && !mFbDev) { //如果没有framebuffer,就退出gg ALOGE("ERROR: failed to open framebuffer (%s), aborting", strerror(-fberr)); abort(); } // these display IDs are always reserved for (size_t i=0 ; i < NUM_BUILTIN_DISPLAYS ; i++) { mAllocatedDisplayIDs.markBit(i); } //硬件vsync信号 if (mHwc) { ALOGI("Using %s version %u.%u", HWC_HARDWARE_COMPOSER, (hwcApiVersion(mHwc) >> 24) & 0xff, (hwcApiVersion(mHwc) >> 16) & 0xff); if (mHwc->registerProcs) { //HWComposer设备上下文变量mCBContext赋值 mCBContext->hwc = this; //函数指针钩子函数hook_invalidate放入上下文 mCBContext->procs.invalidate = &hook_invalidate; //vsync钩子函数放入上下文 mCBContext->procs.vsync = &hook_vsync; if (hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_1)) //hotplug狗子函数放入上下文 mCBContext->procs.hotplug = &hook_hotplug; else mCBContext->procs.hotplug = NULL; memset(mCBContext->procs.zero, 0, sizeof(mCBContext->procs.zero)); //将钩子函数注册进硬件设备,硬件驱动回调这些钩子函数 mHwc->registerProcs(mHwc, &mCBContext->procs); } // don't need a vsync thread if we have a hardware composer //如果有硬件vsync信号, 则不需要软件vsync实现 needVSyncThread = false; ...... } if (mFbDev) {//如果mFbDev为不为null,情况为:没有hwc或者hwc是1.0老的版本 ALOG_ASSERT(!(mHwc && hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_1)), "should only have fbdev if no hwc or hwc is 1.0"); DisplayData& disp(mDisplayData[HWC_DISPLAY_PRIMARY]); disp.connected = true; disp.format = mFbDev->format; DisplayConfig config = DisplayConfig(); config.width = mFbDev->width; config.height = mFbDev->height; config.xdpi = mFbDev->xdpi; config.ydpi = mFbDev->ydpi; config.refresh = nsecs_t(1e9 / mFbDev->fps); disp.configs.push_back(config); disp.currentConfig = 0; } else if (mHwc) {//如果mFbDev为null,情况为:fb打开失败,或者暂时关闭(上面逻辑分析了)。打开了hwc // here we're guaranteed to have at least HWC 1.1 //这里我们至少有HWC 1.1,上面分析过了。NUM_BUILTIN_DISPLAYS 为2 for (size_t i =0 ; i < NUM_BUILTIN_DISPLAYS ; i++) { //获取显示设备相关属性 queryDisplayProperties(i); } } ...... //如果没有硬件vsync,则需要用使用软件vsync模拟 if (needVSyncThread) { // we don't have VSYNC support, we need to fake it mVSyncThread = new VSyncThread(*this); } } |

构造函数主要做了以下几件事情:

1)加载FrameBuffer硬件驱动:

调用loadFbHalModule函数实现,我们继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

// Load and prepare the FB HAL, which uses the gralloc module. Sets mFbDev. int HWComposer::loadFbHalModule() { hw_module_t const* module; //hal层封装的hal函数,参数GRALLOC_HARDWARE_MODULE_ID,加载gralloc模块 int err = hw_get_module(GRALLOC_HARDWARE_MODULE_ID, &module); if (err != 0) { ALOGE("%s module not found", GRALLOC_HARDWARE_MODULE_ID); return err; } //打开fb设备 return framebuffer_open(module, &mFbDev); } |

hw_get_module函数是HAL层框架中封装的加载gralloc的函数,这个我们之前讲过,可以查看Android SurfaceFlinger 学习之路(一)—-Android图形显示之HAL层Gralloc模块实现中的Gralloc模块的加载过程部分.

framebuffer_open函数同上,是用来打开fb设备,同样查看fb设备的打开过程模块。

2)装载HWComposer的硬件模块:

调用loadHwcModule函数来加载HWC模块,我们继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

// Load and prepare the hardware composer module. Sets mHwc. void HWComposer::loadHwcModule() { hw_module_t const* module; //同样是HAL层封装的函数,参数是HWC_HARDWARE_MODULE_ID,加载hwc模块 if (hw_get_module(HWC_HARDWARE_MODULE_ID, &module) != 0) { ALOGE("%s module not found", HWC_HARDWARE_MODULE_ID); return; } //打开hwc设备 int err = hwc_open_1(module, &mHwc); if (err) { ALOGE("%s device failed to initialize (%s)", HWC_HARDWARE_COMPOSER, strerror(-err)); return; } if (!hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_0) || hwcHeaderVersion(mHwc) < MIN_HWC_HEADER_VERSION || hwcHeaderVersion(mHwc) > HWC_HEADER_VERSION) { ALOGE("%s device version %#x unsupported, will not be used", HWC_HARDWARE_COMPOSER, mHwc->common.version); hwc_close_1(mHwc); mHwc = NULL; return; } } |

同样是借助hw_get_module这个HAL函数,不过参数是HWC_HARDWARE_MODULE_ID,加载HWC模块;然后hwc_open_1函数打开hwc设备。

3)FB和HWC相关逻辑判断(1):

如果有FB和HWC,并且也有pre-1.1 HWC,就要先关闭FB设备。这个是暂时的,直到HWC准备好;

如果我们没有HWC硬件支持,或者之前的pre-1.1 HWC,,如HWC 1.0,那么FrameBuffer就是必须的。如果脸FB都没有,那就GG。

4)硬件设备打开成功:

如果硬件设备打开成功,则将钩子函数hook_invalidate、hook_vsync和hook_hotplug注册进硬件设备,作为回调函数。这三个都是硬件产生事件信号,通知上层SurfaceFlinger的回调函数,用于处理这个信号。

因为我们本节是Vsync信号相关,所以我们只看看hook_vsync钩子函数。这里指定了vsync的回调函数是hook_vsync,如果硬件中产生了VSync信号,将通过这个函数来通知上层,看看它的代码:

|

1 2 3 4 5 6 |

void HWComposer::hook_vsync(const struct hwc_procs* procs, int disp, int64_t timestamp) { cb_context* ctx = reinterpret_cast<cb_context*>( const_cast<hwc_procs_t*>(procs)); ctx->hwc->vsync(disp, timestamp); } |

hook_vsync钩子函数会调用vsync函数,我们继续看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

void HWComposer::vsync(int disp, int64_t timestamp) { if (uint32_t(disp) < HWC_NUM_PHYSICAL_DISPLAY_TYPES) { { Mutex::Autolock _l(mLock); // There have been reports of HWCs that signal several vsync events // with the same timestamp when turning the display off and on. This // is a bug in the HWC implementation, but filter the extra events // out here so they don't cause havoc downstream. if (timestamp == mLastHwVSync[disp]) { ALOGW("Ignoring duplicate VSYNC event from HWC (t=%" PRId64 ")", timestamp); return; } mLastHwVSync[disp] = timestamp; } char tag[16]; snprintf(tag, sizeof(tag), "HW_VSYNC_%1u", disp); ATRACE_INT(tag, ++mVSyncCounts[disp] & 1); //这里调用EventHandler类型变量mEventHandler就是SurfaceFlinger, //所以调用了SurfaceFlinger的onVSyncReceived函数 mEventHandler.onVSyncReceived(disp, timestamp); } } |

mEventHandler对象类型为EventHandler,我们在SurfaceFlinger的init函数创建HWComposer类实例时候讲SurfaceFlinger强转为EventHandler作为构造函数的参数传入其中。再者SurfaceFlinger继承HWComposer::EventHandler,所以这里没毛病,老铁~所以最终会调用SurfaceFlinger的onVSyncReceived函数。这就是硬件vsync信号的产生,关于vsync信号流程工作我们下面马上会讲到。

5)FB和HWC相关逻辑判断(2):

如果mFbDev为不为null,情况为:没有hwc或者hwc是1.0老的版本。此时就要设置一些FB的属性了,从主屏幕获取;

如果mFbDev为null,情况为:fb打开失败,或者暂时关闭(上面逻辑分析了)。仅仅打开了hwc,这里我们至少有HWC 1.1,上面分析过了。此时我们要获取显示设备相关属性,进入else if逻辑判断,里面的NUM_BUILTIN_DISPLAYS 为2,则只有主屏幕和扩展屏幕需要查询显示设备属性,调用queryDisplayProperties函数,我们可以产看这个函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 |

status_t HWComposer::queryDisplayProperties(int disp) { LOG_ALWAYS_FATAL_IF(!mHwc || !hwcHasApiVersion(mHwc, HWC_DEVICE_API_VERSION_1_1)); // use zero as default value for unspecified attributes int32_t values[NUM_DISPLAY_ATTRIBUTES - 1]; memset(values, 0, sizeof(values)); const size_t MAX_NUM_CONFIGS = 128; uint32_t configs[MAX_NUM_CONFIGS] = {0}; size_t numConfigs = MAX_NUM_CONFIGS; //hwc设备获取显屏配置信息,都是HAL层封装的函数,并保存在这些指针指向内容中 status_t err = mHwc->getDisplayConfigs(mHwc, disp, configs, &numConfigs); //如果一个不可插入的显屏没有连接,则将connected赋值为false if (err != NO_ERROR) { // this can happen if an unpluggable display is not connected mDisplayData[disp].connected = false; return err; } mDisplayData[disp].currentConfig = 0; //获取显示相关属性 for (size_t c = 0; c < numConfigs; ++c) { err = mHwc->getDisplayAttributes(mHwc, disp, configs[c], DISPLAY_ATTRIBUTES, values); if (err != NO_ERROR) { // we can't get this display's info. turn it off. mDisplayData[disp].connected = false; return err; } //获取成功后,创建一个config对象,用于保存这些属性 DisplayConfig config = DisplayConfig(); for (size_t i = 0; i < NUM_DISPLAY_ATTRIBUTES - 1; i++) { switch (DISPLAY_ATTRIBUTES[i]) { case HWC_DISPLAY_VSYNC_PERIOD://屏幕刷新率 config.refresh = nsecs_t(values[i]); break; case HWC_DISPLAY_WIDTH://屏幕宽 config.width = values[i]; break; case HWC_DISPLAY_HEIGHT://屏幕高 config.height = values[i]; break; case HWC_DISPLAY_DPI_X://DPI像素X config.xdpi = values[i] / 1000.0f; break; case HWC_DISPLAY_DPI_Y://DPI像素Y config.ydpi = values[i] / 1000.0f; break; default: ALOG_ASSERT(false, "unknown display attribute[%zu] %#x", i, DISPLAY_ATTRIBUTES[i]); break; } } //如果没有获取到DPI则给个默认的,跟进去是213或者320 if (config.xdpi == 0.0f || config.ydpi == 0.0f) { float dpi = getDefaultDensity(config.width, config.height); config.xdpi = dpi; config.ydpi = dpi; } //将每个设备的显示信息存起来 mDisplayData[disp].configs.push_back(config); } // FIXME: what should we set the format to? //像素编码都为ARGB_8888 mDisplayData[disp].format = HAL_PIXEL_FORMAT_RGBA_8888; //这里讲connected复制为true mDisplayData[disp].connected = true; return NO_ERROR; } |

queryDisplayProperties查询每个显示设备的属性,主要有以下几步:

- hwc设备获取显屏配置信息,都是HAL层封装的函数,并保存在这些指针指向内容中;

- 获取显示先关属性,并保存起来。

这一部分注释写的比较清晰,可以查看注释。

6)软件VSync信号产生:

如果硬件设备打开失败,则需要软件vsync信号,因此needVSyncThread为true,会进入最后一个if条件判断逻辑,则会创建一个VSyncThread类实例,用来模拟软件vsync信号。

所以我们看看VSyncThread这个类,它集成与Thread,又是一个线程,定义位于HWComposer.h中:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

// this class is only used to fake the VSync event on systems that don't // have it. class VSyncThread : public Thread { HWComposer& mHwc; mutable Mutex mLock; Condition mCondition; bool mEnabled; mutable nsecs_t mNextFakeVSync; nsecs_t mRefreshPeriod; virtual void onFirstRef(); virtual bool threadLoop(); public: VSyncThread(HWComposer& hwc); void setEnabled(bool enabled); }; |

构造函数位于HWComposer.cpp中:

|

1 2 3 4 5 6 |

HWComposer::VSyncThread::VSyncThread(HWComposer& hwc) : mHwc(hwc), mEnabled(false),//false mNextFakeVSync(0),//0 mRefreshPeriod(hwc.getRefreshPeriod(HWC_DISPLAY_PRIMARY))//获取主屏幕刷新率 { } |

构造函数中初始化了mEnable变量为false,mNextFakeVSync为0。还有获取了主屏幕的刷新率,并保存在mRefreshPeriod。屏幕刷新率我们在上一篇VSync信号已经科普过了。

我们看看屏幕刷新率的获取,hwc.getRefreshPeriod(HWC_DISPLAY_PRIMARY)函数:

|

1 2 3 4 |

nsecs_t HWComposer::getRefreshPeriod(int disp) const { size_t currentConfig = mDisplayData[disp].currentConfig; return mDisplayData[disp].configs[currentConfig].refresh; } |

这里获取的屏幕刷新率refresh就是我们之前queryDisplayProperties函数里获取的。其实queryDisplayProperties不止在HWComposer构造函数里调用,还会在钩子函数hook_hotplug里调用,我们查看hotplug函数:

|

1 2 3 4 5 6 7 8 9 |

void HWComposer::hotplug(int disp, int connected) { if (disp == HWC_DISPLAY_PRIMARY || disp >= VIRTUAL_DISPLAY_ID_BASE) { ALOGE("hotplug event received for invalid display: disp=%d connected=%d", disp, connected); return; } queryDisplayProperties(disp); mEventHandler.onHotplugReceived(disp, bool(connected)); } |

如果是主屏幕或者虚拟设备则不会查询设备属性。

我猜测是插入扩展屏幕后,会重新再查询一下屏幕信息,从硬件发出的信号。

好了我们继续回到上面,因为VSyncThread对象付给了一个强指针,所以会调用onFirstRef函数:

|

1 2 3 |

void HWComposer::VSyncThread::onFirstRef() { run("VSyncThread", PRIORITY_URGENT_DISPLAY + PRIORITY_MORE_FAVORABLE); } |

这样这个软件vsync产生线程就运行起来了,因此我们需要查看threadLoop函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 |

bool HWComposer::VSyncThread::threadLoop() { { // scope for lock Mutex::Autolock _l(mLock); while (!mEnabled) {//这个是false,在构造函数里赋值 //因此会阻塞在这里 mCondition.wait(mLock); } } const nsecs_t period = mRefreshPeriod;//屏幕刷新率 const nsecs_t now = systemTime(CLOCK_MONOTONIC);//now nsecs_t next_vsync = mNextFakeVSync;//下一次vsync时间,初始值为0 nsecs_t sleep = next_vsync - now;//距离下次vsync信号中间的睡眠时间 if (sleep < 0) {//如果sleep小于0,比如第一次进来mNextFakeVSync为0;或者错过n次vsync信号 // we missed, find where the next vsync should be //如果错过了,则重新计算下一次vsync的偏移时间 sleep = (period - ((now - next_vsync) % period)); next_vsync = now + sleep; } //偏移时间加上刷新率周期时间 mNextFakeVSync = next_vsync + period; struct timespec spec; spec.tv_sec = next_vsync / 1000000000; spec.tv_nsec = next_vsync % 1000000000; int err; do { //然后睡眠等待下次vsync信号的时间 err = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &spec, NULL); } while (err < 0 && errno == EINTR); if (err == 0) {//睡眠ok后,则去驱动SurfaceFlinger函数触发 mHwc.mEventHandler.onVSyncReceived(0, next_vsync); } return true; } |

上面的代码,我们需要注意两个地方:

- mEnabled默认为false,mCondition在这里阻塞,直到有人调用了signal(),同时mEnabled为true;

- 如果阻塞解开,则会定期休眠,然后去驱动SF,这就相当于产生了持续的Vsync信号。

这个函数会间隔模拟产生VSync的信号的原理是在固定时间发送消息给HWCompoer的消息对象mEventHandler,这个其实就到SurfaceFlinger的onVSyncReceived函数了。用软件模拟VSync信号在系统比较忙的时候可能会丢失一些信号,所以才有中间的sleep小于0的情况。

到目前为止,HWC中新建了一个线程VSyncThread,阻塞中,下面我们看下打开Vsync开关的闸刀是如何建立的,以及何处去开闸刀。

VSync 信号闸刀控制

上面我分析了Vsync软件和硬件信号的产生过程,但是HWC中新建的线程VSyncThread是处于阻塞当中,因此我们需要一个闸刀去控制这个开关。

Vsync信号开关

这个闸刀还是在SurfaceFlinger的init函数中建立的,我们查看这个位置:

|

1 2 3 4 5 6 7 |

void SurfaceFlinger::init() { ...... mEventControlThread = new EventControlThread(this); mEventControlThread->run("EventControl", PRIORITY_URGENT_DISPLAY); ...... } |

这是一个Thread线程类,因此都会实现threadLoop函数。不过我们先看看它的构造函数:

|

1 2 3 4 |

EventControlThread::EventControlThread(const sp<SurfaceFlinger>& flinger): mFlinger(flinger), mVsyncEnabled(false) { } |

比较简单,将SurfaceFlinger对象传了进来,并且将mVsyncEnabled标志初始为false。

然后我们看看他的threadLoop线程函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

bool EventControlThread::threadLoop() { Mutex::Autolock lock(mMutex); bool vsyncEnabled = mVsyncEnabled;//初始为false //先调用一遍SurfaceFlinger的eventControl函数 mFlinger->eventControl(HWC_DISPLAY_PRIMARY, SurfaceFlinger::EVENT_VSYNC, mVsyncEnabled); //这里是个死循环 while (true) { status_t err = mCond.wait(mMutex);//会阻塞在这里 if (err != NO_ERROR) { ALOGE("error waiting for new events: %s (%d)", strerror(-err), err); return false; } //vsyncEnabled 开始和mVsyncEnabled都为false,如果有其他地方改变了mVsyncEnabled, //会去调用SF的eventControl函数 if (vsyncEnabled != mVsyncEnabled) { mFlinger->eventControl(HWC_DISPLAY_PRIMARY, SurfaceFlinger::EVENT_VSYNC, mVsyncEnabled); vsyncEnabled = mVsyncEnabled; } } return false; } |

threadLoop函数主要逻辑分两步:

1.进来时候在mCond处阻塞,可以看到外面是个死循环,所以如果有其他地方对这个mCond调用了signal,执行完下面的代码又会阻塞

2.解除阻塞后,如果vsyncEnabled != mVsyncEnabled,也就是开关状态不同,由开到关和由关到开,都回去调用mFlinger->eventControl,调用完成后把这次的开关状态保存,vsyncEnabled = mVsyncEnabled;,与下次做比较。

从上面我们能明显认识到EventControlThread线程,其实就起到了个闸刀的作用,等待着别人去开、关。

所以这个开关操作就在SurfaceFlinger的eventControl函数,我们看看它怎么实现:

|

1 2 3 4 |

void SurfaceFlinger::eventControl(int disp, int event, int enabled) { ATRACE_CALL(); getHwComposer().eventControl(disp, event, enabled); } |

它又会调用HWComposer的eventControl函数,我们继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 |

void HWComposer::eventControl(int disp, int event, int enabled) { //如果是没有申请注册的设备,GG if (uint32_t(disp)>31 || !mAllocatedDisplayIDs.hasBit(disp)) { ALOGD("eventControl ignoring event %d on unallocated disp %d (en=%d)", event, disp, enabled); return; } //不是EVENT_VSYNC事件,GG if (event != EVENT_VSYNC) { ALOGW("eventControl got unexpected event %d (disp=%d en=%d)", event, disp, enabled); return; } status_t err = NO_ERROR; //如果是硬件Vsync闸刀,并且不是调试软件vsync if (mHwc && !mDebugForceFakeVSync) { // NOTE: we use our own internal lock here because we have to call // into the HWC with the lock held, and we want to make sure // that even if HWC blocks (which it shouldn't), it won't // affect other threads. Mutex::Autolock _l(mEventControlLock); const int32_t eventBit = 1UL << event; const int32_t newValue = enabled ? eventBit : 0; const int32_t oldValue = mDisplayData[disp].events & eventBit; if (newValue != oldValue) { ATRACE_CALL(); //硬件vsync闸刀控制开关 err = mHwc->eventControl(mHwc, disp, event, enabled); if (!err) { int32_t& events(mDisplayData[disp].events); events = (events & ~eventBit) | newValue; char tag[16]; snprintf(tag, sizeof(tag), "HW_VSYNC_ON_%1u", disp); ATRACE_INT(tag, enabled); } } // error here should not happen -- not sure what we should // do if it does. ALOGE_IF(err, "eventControl(%d, %d) failed %s", event, enabled, strerror(-err)); } //如果没有硬件Vsync支持,则调用软件vsync信号闸刀 if (err == NO_ERROR && mVSyncThread != NULL) { mVSyncThread->setEnabled(enabled); } } |

这里可以看到还是分软件闸刀和硬件闸刀:

- 硬件闸刀控制就到了HAL层函数了,调用了mHwc->eventControl(mHwc, disp, event, enabled)这个逻辑;

- 如果没有硬件Vsync支持,则会调用软件闸刀控制手段,VsyncThread的setEnable函数。

硬件就不用了看,反正也看不到代码。。。我们看看软件Vsync闸刀控制,即VsyncThread的setEnable函数:

|

1 2 3 4 5 6 7 |

void HWComposer::VSyncThread::setEnabled(bool enabled) { Mutex::Autolock _l(mLock); if (mEnabled != enabled) { mEnabled = enabled; mCondition.signal(); } } |

将软件模拟线程VSyncThread的mCondition释放,这时候VSyncThread就会定期产生“信号”,去驱动SF了。

EventControlThread开关

但是我们上边漏了东西,就是EventControlThread本身的闸刀,它自己还在mCond.wait(mMutex)这里阻塞呢。那么EventControlThread这个闸刀到底在哪儿打开呢?

所以我们还得的查看SurfaceFlinger的init函数,再这里它有一处逻辑:

|

1 2 3 4 5 6 7 8 |

void SurfaceFlinger::init() { ...... // set initial conditions (e.g. unblank default device) initializeDisplays(); ...... } |

我们查看initializeDisplays函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

void SurfaceFlinger::initializeDisplays() { class MessageScreenInitialized : public MessageBase { SurfaceFlinger* flinger; public: MessageScreenInitialized(SurfaceFlinger* flinger) : flinger(flinger) { } virtual bool handler() { flinger->onInitializeDisplays(); return true; } }; sp<MessageBase> msg = new MessageScreenInitialized(this); postMessageAsync(msg); // we may be called from main thread, use async message } |

这里放了一个消息给SF的MessageQueue,然后消息回调onInitializeDisplays函数,我们继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

void SurfaceFlinger::onInitializeDisplays() { // reset screen orientation and use primary layer stack //重置屏幕方向,并使用主屏幕图层 Vector<ComposerState> state; Vector<DisplayState> displays; DisplayState d; d.what = DisplayState::eDisplayProjectionChanged | DisplayState::eLayerStackChanged; d.token = mBuiltinDisplays[DisplayDevice::DISPLAY_PRIMARY]; d.layerStack = 0; d.orientation = DisplayState::eOrientationDefault; d.frame.makeInvalid(); d.viewport.makeInvalid(); d.width = 0; d.height = 0; displays.add(d); //重设屏幕Transaction状态 setTransactionState(state, displays, 0); //我们要找到闸刀开关在这个里面 setPowerModeInternal(getDisplayDevice(d.token), HWC_POWER_MODE_NORMAL); //主显示器刷新率 const nsecs_t period = getHwComposer().getRefreshPeriod(HWC_DISPLAY_PRIMARY); mAnimFrameTracker.setDisplayRefreshPeriod(period); } |

其它都不重要,重要在setPowerModeInternal函数,我们继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 |

void SurfaceFlinger::setPowerModeInternal(const sp<DisplayDevice>& hw, int mode) {//HWC_POWER_MODE_NORMAL ALOGD("Set power mode=%d, type=%d flinger=%p", mode, hw->getDisplayType(), this); int32_t type = hw->getDisplayType();//DISPLAY_PRIMARY int currentMode = hw->getPowerMode();//HWC_POWER_MODE_OFF if (mode == currentMode) {//skip ALOGD("Screen type=%d is already mode=%d", hw->getDisplayType(), mode); return; } hw->setPowerMode(mode); if (type >= DisplayDevice::NUM_BUILTIN_DISPLAY_TYPES) { ALOGW("Trying to set power mode for virtual display"); return; } if (currentMode == HWC_POWER_MODE_OFF) {//会走到这里 getHwComposer().setPowerMode(type, mode); if (type == DisplayDevice::DISPLAY_PRIMARY) { // FIXME: eventthread only knows about the main display right now //EventThread是后面讲到VSync工作流程的部分,后面讲,这里影响不大 mEventThread->onScreenAcquired(); //这里才是重点,是打开闸刀的地方 resyncToHardwareVsync(true); } mVisibleRegionsDirty = true; repaintEverything(); } else if (mode == HWC_POWER_MODE_OFF) {//不会到这里 if (type == DisplayDevice::DISPLAY_PRIMARY) { disableHardwareVsync(true); // also cancels any in-progress resync // FIXME: eventthread only knows about the main display right now mEventThread->onScreenReleased(); } getHwComposer().setPowerMode(type, mode); mVisibleRegionsDirty = true; // from this point on, SF will stop drawing on this display } else {//也不会到这儿 getHwComposer().setPowerMode(type, mode); } } |

上面逻辑也不难,只要我们记住以前赋值的变量时多少就行了。

我们获取主显屏的信息,因为是灭的HWC_POWER_MODE_OFF模式,所以进入这个if判断里面,然后调用resyncToHardwareVsync函数。我们继续查看它:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

void SurfaceFlinger::resyncToHardwareVsync(bool makeAvailable) {//true Mutex::Autolock _l(mHWVsyncLock); //true if (makeAvailable) { mHWVsyncAvailable = true; } else if (!mHWVsyncAvailable) { ALOGE("resyncToHardwareVsync called when HW vsync unavailable"); return; } //主屏幕硬件刷新率 const nsecs_t period = getHwComposer().getRefreshPeriod(HWC_DISPLAY_PRIMARY); //设置硬件刷新率 mPrimaryDispSync.reset(); mPrimaryDispSync.setPeriod(period); if (!mPrimaryHWVsyncEnabled) {//false,进入 mPrimaryDispSync.beginResync(); //eventControl(HWC_DISPLAY_PRIMARY, SurfaceFlinger::EVENT_VSYNC, true); //打开闸刀 mEventControlThread->setVsyncEnabled(true); mPrimaryHWVsyncEnabled = true; } } |

先将mHWVsyncAvailable 标志位置为true,然后设置硬件刷新周期,最后打开mEventControlThread闸刀,我们可以查看它的setVsyncEnabled函数:

|

1 2 3 4 5 |

void EventControlThread::setVsyncEnabled(bool enabled) { Mutex::Autolock lock(mMutex); mVsyncEnabled = enabled; mCond.signal(); } |

可以看到,在这里释放了前面的mCond,让EventControlThread去调用mFlinger->eventControl()函数,从而去HWC中打开Vsync开关。

VSync信号构成

本来我们应该继续顺着代码去跟踪vsync信号的分发过程,但是在这之前我们有必要了解一下vsync信号的构成,以便我们后续分析没有盲点。

我们知道Android是用Vsync来驱动系统的画面更新包括APPview draw ,surfaceflinger 画面的合成,display把surfaceflinger合成的画面呈现在LCD上。要分析Vsync信号的组成,可以了解一下systrace工具。

Systrace简介

Systrace是Android4.1中新增的性能数据采样和分析工具。它可帮助开发者收集Android关键子系统(如surfaceflinger、WindowManagerService等Framework部分关键模块、服务,View系统等)的运行信息,从而帮助开发者更直观的分析系统瓶颈,改进性能。

systrace的使用可以查看官网,或者问娘问哥。

官网使用教程如下:

https://developer.android.com/studio/profile/systrace-walkthru.html

命令行使用方式:

https://developer.android.com/studio/profile/systrace-commandline.html

生成html分析:

https://developer.android.com/studio/profile/systrace.html

分析systrace生成文件,要在eng版本rom,其他的要么无法生成,要么没有权限。

下面我抄一个例子分析。

VSync的构成

就挑一个相册这个应用,是系统自带的system app,包名是com.android.gallery3d。

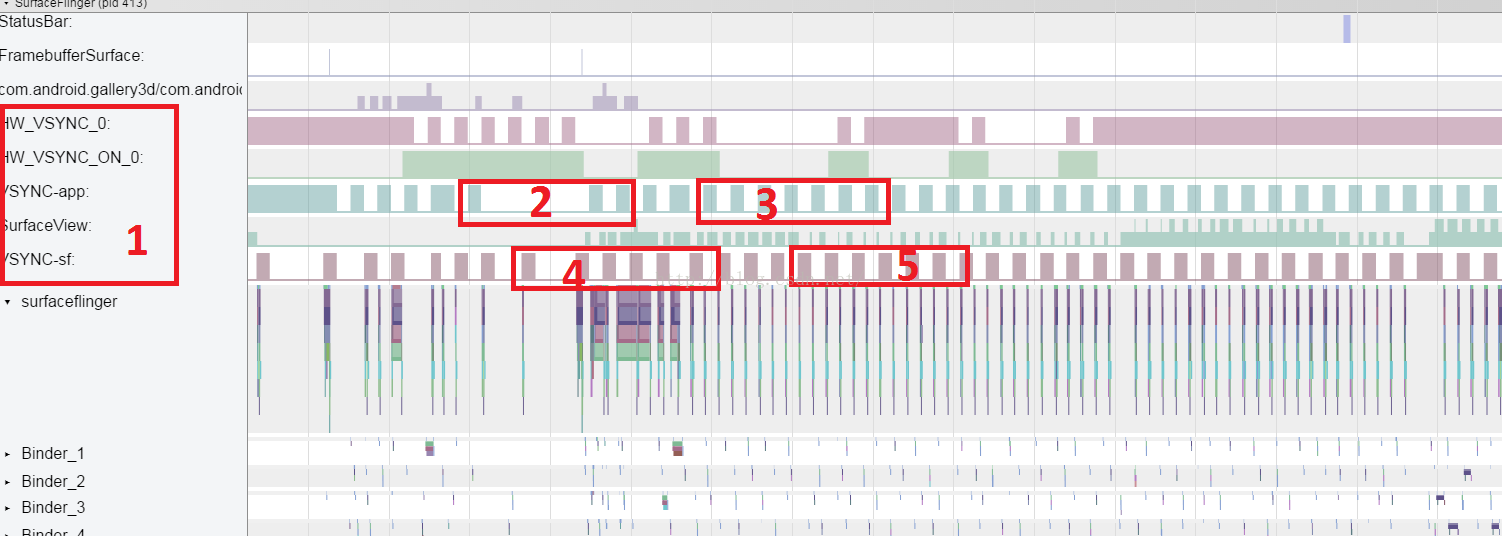

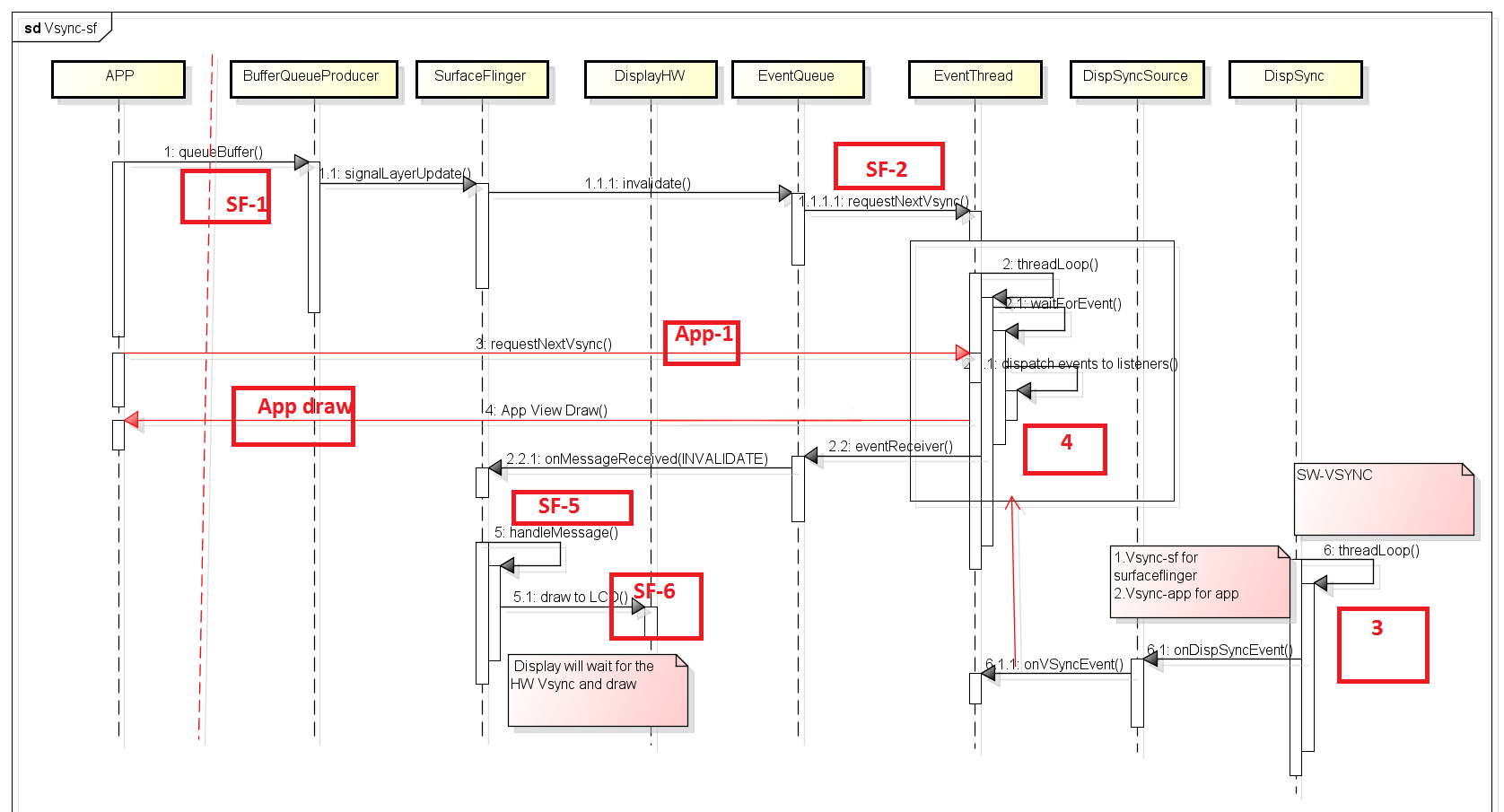

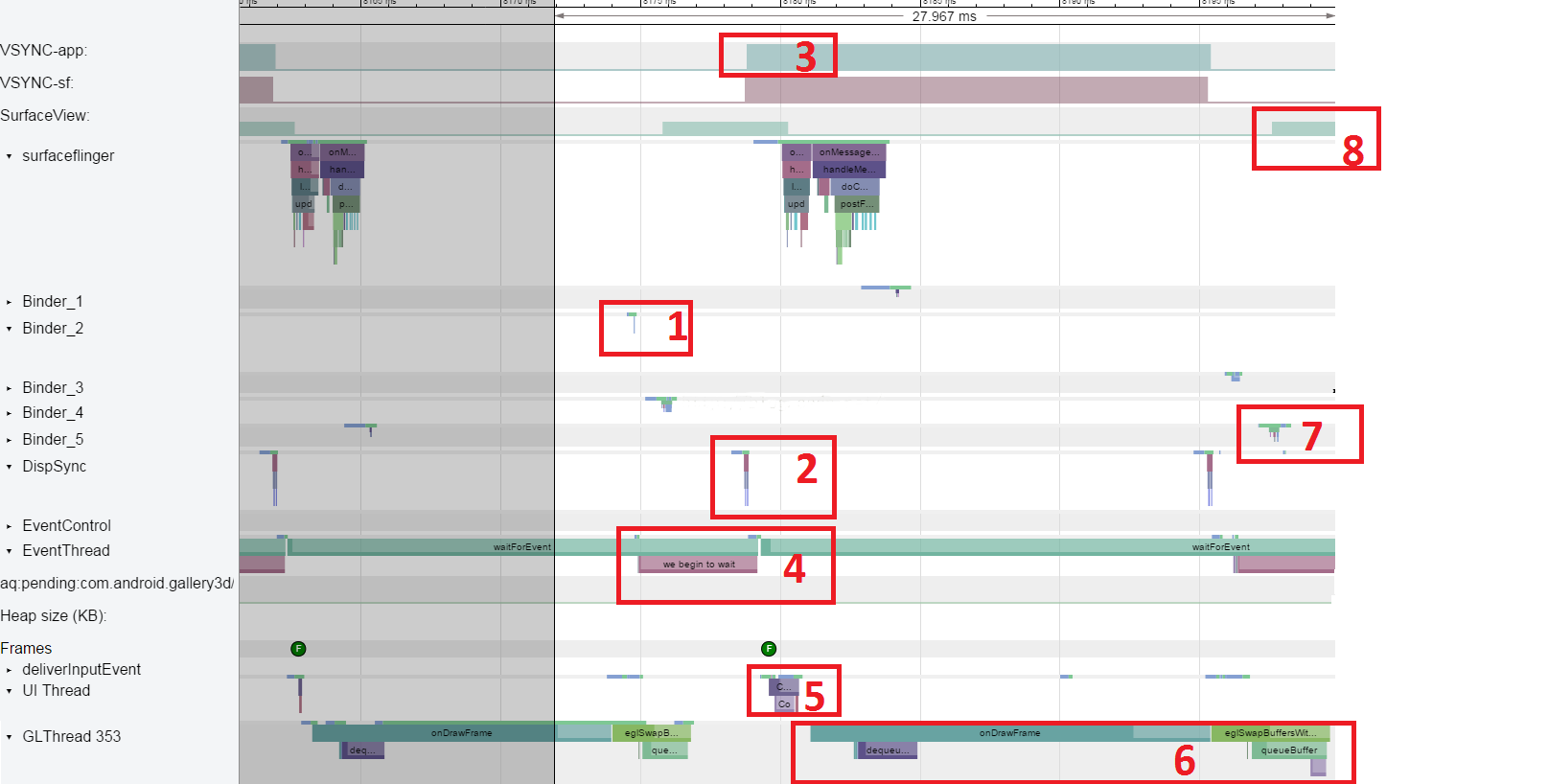

在systrace中,我们经常可以看到如上图的信息。

- 红色框1是与Vsync相关event信息.这些Vsync event构成了android系统画面更新基础;

- 红色框2和红色框3是Vsync-app的信息.我们可以看到红色框2比红色框3稀疏.我们将会在本文说明其原因;

- 红色框4和红色框5是Vsync-sf的信息.我们可以看到红色框4比红色框5稀疏.我们将会在本文说明其原因。

从上图可以看出来,VSync信号由HW_VSYNC_ON_0,HW_VSYNC_0, Vsync-app和Vsync-sf四部分构成:

- HW_VSYNC_ON_0:代表PrimaryDisplay的VSync被enable或disable。0这个数字代表的是display的编号, 0是PrimaryDisplay,如果是Externalmonitor,就会是HW_VSYNC_ON_1。当SF要求HWComposer将Display的VSync打开或关掉时,这个event就会记录下来;

- HW_VSYNC_0:代表PrimaryDisplay的VSync发生时间点, 0同样代表display编号。其用来调节Vsync-app和Vsync-sfevent的输出;

- Vsync-app:App,SystemUI和systemserver 等viewdraw的触发器;

- Vsync-sf:Surfaceflinger合成画面的触发器。

通常为了避免Tearing的现象,画面更新(Flip)的动作通常会在VSync开始的时候才做,因为在VSync开始到它结束前,Display不会把framebuffer资料显示在display上,所以在这段时间做Flip可以避免使用者同时看到前后两个部份画面的现象。目前user看到画面呈现的过程是这样的,app更新它的画面后,它需要透过BufferQueue通知SF,SF再将更新过的app画面与其它的App或SystemUI组合后,再显示在User面前。在这个过程里,有3个component牵涉进来,分别是App、SF、与Display。以目前Android的设计,这三个Component都是在VSync发生的时候才开始做事。我们将它们想成一个有3个stage的pipeline,这个pipeline的clock就LCD的TE信号(60HZ)也即HW_VSYNC_0。

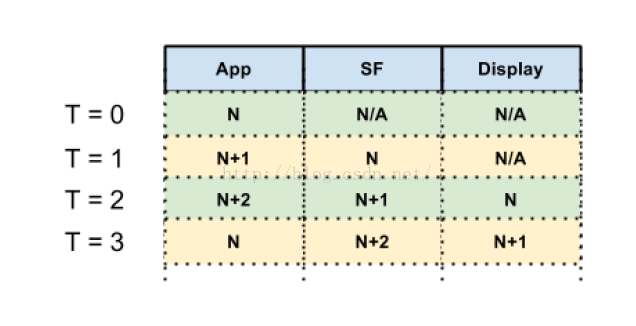

我们来看看android draw的pipeline,如下:

- T = 0时, App正在画N, SF与Display都没内容可用

- T = 1时, App正在画N+1, SF组合N, Display没Buffer可显示

- T = 2时, App正在画N+2, SF组合N+1, Display显示N

- T = 3时, App正在画N, SF组合N+2, Display显示N+1

- ……

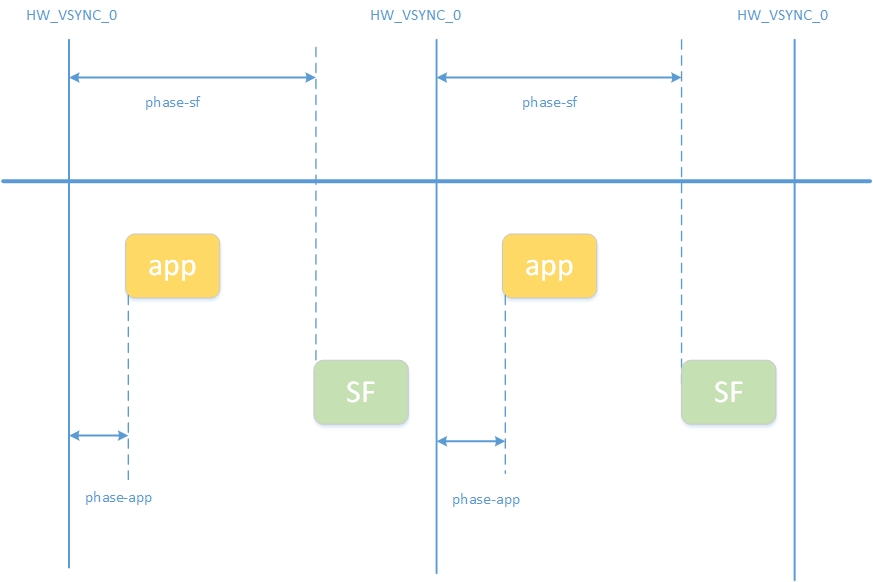

如果按照这个步骤,当user改变一个画面时,要等到2个VSync后,画面才会显示在user面前,latency大概是33ms (2个frame的时间)。但是对大部份的操作来讲,可能app加SF画的时间一个frame(16.6ms)就可以完成。因此,Android就从HW_VSYNC_0中产生出两个VSync信号,VSYNC-app是给App用的,VSYNC-sf是给SF用的, Display则是使用HW_VSYNC_0。VSYNC-app与VSYNC-sf可以分别给定一个phase,简单的说:

VSYNC-app = HW_VSYNC_0 + phase_app

VSYNC-sf =HW_VSYNC_0 + phase_sf

从而使App draw和surfaceflinger的合成,错开时间运行。这样就有可能整个系统draw的pipeline更加有效率,从而提高用户体验。

也就是说,如果phase_app与phase_sf设定的好的话,可能大部份user使用的状况, App+SF可以在一个frame里完成,然后在下一个HW_VSYNC_0来的时候,显示在display上。

理论上透过VSYNC-sf与VSYNC-app的确是可以有机会在一个frame里把App+SF做完,但是实际上不是一定可以在一个frame里完成。因为有可能因为CPU调度,GPUperformance不够,以致App或SF没办法及时做完。但是即便如此,把app,surfaceflinger和displayVsync分开也比用一个Vsync来trigger appdraw,surfaceflinger合成,display在LCD上draw的性能要好很多(FPS更高)。因为如果放在同一时间,CPU调度压力更大,可能会卡顿、延迟。

那么是否我们收到LCD的Vsync event就会触发Vsync-app和Vsync-SF呢?如果是这样我们就不会看到本文开头的Vsync-app和Vsync-SF的节奏不一致的情况(红色框2比红色框3稀疏)。事实上,在大多数情况下,APP的画面并不需要一直更新。比如我们看一篇文章,大部份时间,画面是维持一样的,如果我们在每个LCD的VSYNC来的时候都触发SF或APP,就会浪费时间和Power。可是在画面持续更新的情况下,我们又需要触发SF和App的Vsync event。例如玩game或播影片时。就是说我们需要根据是否有内容的更新来选择Vsync event出现的机制。

Vsync event 的触发需要按需出现。所以在Android里,HW_VSYNC_0,Vsync-sf和Vsync-app都会按需出现。HW_VSYNC_0是根据是否需要调节sf和app的Vsync event而出现,而SF和App则会call requestNextVsync()来告诉系统我要在下一个VSYNC需要被trigger。也是虽然系统每秒有60个HW VSYNC,但不代表APP和SF在每个VSYNC都要更新画面。因此,在Android里,是根据SoftwareVSYNC(Vsync-sf,Vsync-app)来更新画面。Software VSYNC是根据HWVSYNC过去发生的时间,推测未来会发生的时间。因此,当APP或SF利用requestNextVsync时,Software VSYNC才会触发VSYNC-sf或VSYNC-app。

下面我们就继续跟着代码,分析VSync信号工作流程。

VSync工作流程

整体概述

我们知道Vsync是android display系统的重要基石,其驱动android display系统不断的更新App侧的绘画,并把相关内容及时的更新到LCD上.其包含的主要代码如下:

|

1 2 3 4 5 6 7 |

frameworks\native\services\surfaceflinger\DispSync.cpp frameworks\native\services\surfaceflinger\SurfaceFlinger.cpp frameworks\native\services\surfaceflinger\SurfaceFlinger.cpp$DispSyncSource frameworks\native\services\surfaceflinger\EventThread.cpp frameworks\native\services\surfaceflinger\MessageQueue.cpp frameworks\native\libs\gui\BitTube.cpp frameworks\native\libs\gui\BufferQueueProducer.cpp |

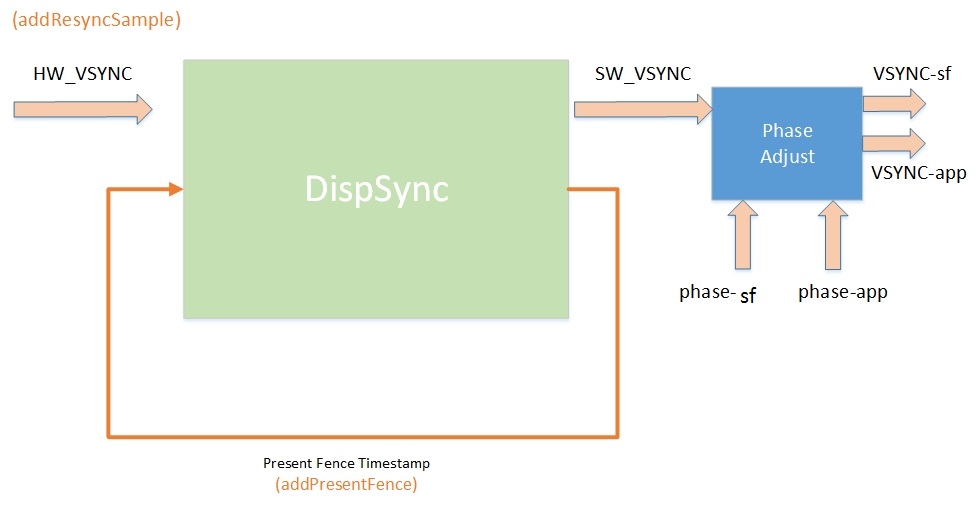

- DispSync.cpp:这个class包含了DispSyncThread,是SW-SYNC的心脏,所有的SW-SYNCevent均由其产生。在Android系统中,只有一个DispSync;

- SurfaceFlinger.cpp:这个类主要处理layer的合成,它合成好相关的layer后发送command给HW display进行进行显示;

- DispSyncSource:Vsync source 在Android系统中有两个instance,一是Vsync-app,另一个Vsync-sf。当SW-SYNC发生时,Vsyncsource会callback到其相应的EventThread,并且会在Systrace上显示出Vsync-sf和Vsync-app的跳变;

- EventThread.cpp:Vsync event处理线程,在系统中有两个EventThread,一个用于Vsync-app,另一个用于Vsync-sf。其记录App和SurfaceFlinger的requestnextVsync()请求,当SW-VSYNCevent触发时,EventThread线程通过BitTube通知App或SurfaceFlinger,App开始drawview,SurfaceFlinger 开始合成 dirty layer;

- MessageQueue.cpp:MessageQueue 主要处理surfaceflinger的Vsync请求和发生Vsync事件给surfaceFlinger;

- BitTube.cpp:Vsync 事件的传输通道。App或Surfaceflinger首先与EventThread建立DisplayEventConnection(EventThread::Connection::Connection,Connection 是BnDisplayEventConnection子类,BitTube是其dataChannel)。App或surfaceFlinger通过call DisplayEventConnection::requestNextVsync()(binder 通信)向EventThread请求Vsync event,当EventThread收到SW-VSYNCevent时,其通过BitTube把Vsync evnet发送给App或SufaceFlinger;

- BufferQueueProducer.cpp:在Vsync架构中,其主要作用是向EventThread请求Vsync-sfevent。当App画完一个frame后,其会把画完的buffer放到bufferqueue中通过call BufferQueueProducer::queueBuffer(),进而surfaceflinger进程会通过call DisplayEventConnection:: requestNextVsync()向EventThread请求Vsync event。

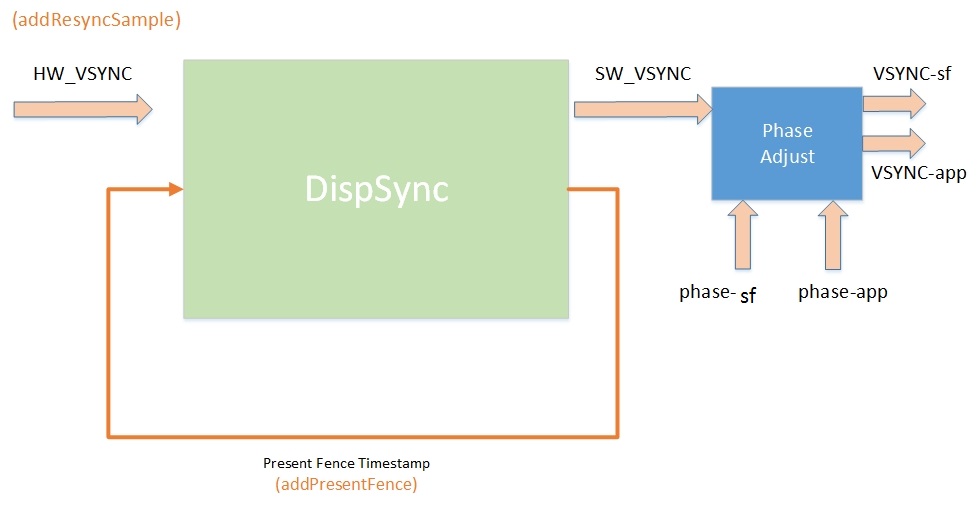

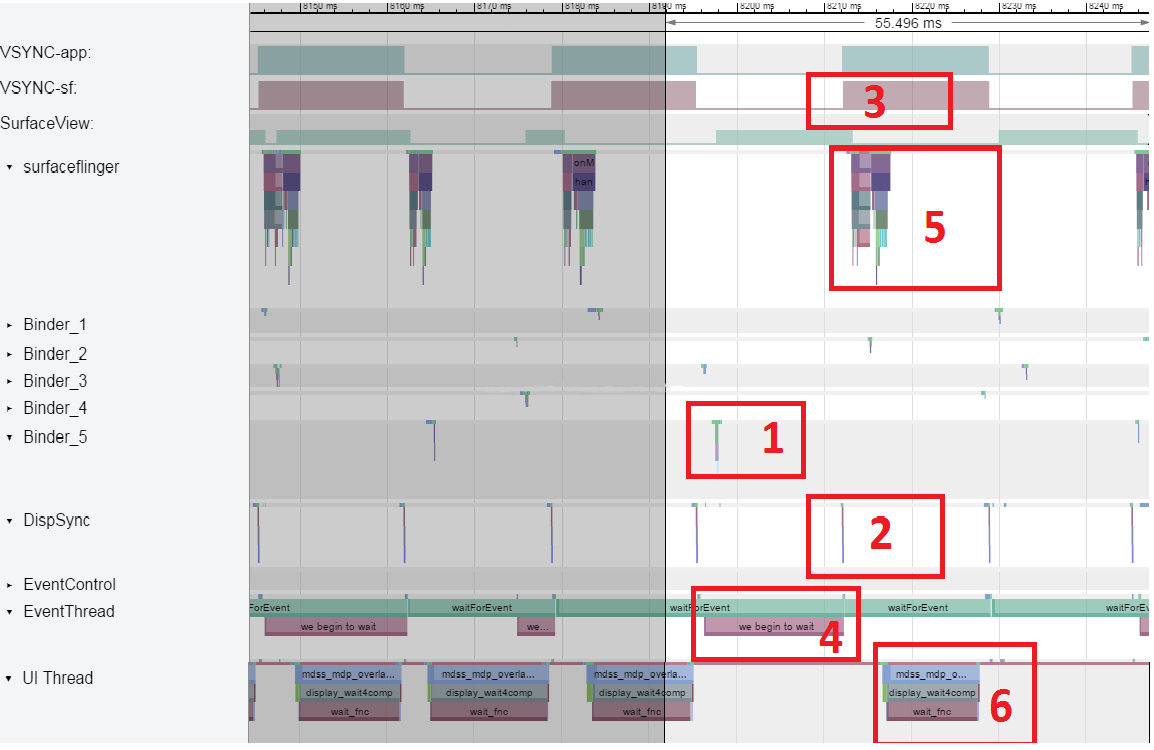

Vsyncevent产生的示意图如下:

App需要draw一个frame时,其会向EventThread(appEventThread)请求Vysncevent,当EventThread收到Vsync event时,EventThread通过BitTueb把Vsync event通知到App,同时跳变systrace中的Vsync-app。App收到Vsync-app,开始draw frame。当App画完一个frame后,把画好的内容放到bufferqueue中,就会要求一个Vsync-sf event,以便surfaceflinger在收到Vsync-sf event时合成相关的dirty的内容并通知DisplayHW。Display HW 会在下一个HWvsync时间点,把相关的内容更新到LCD上完成内容的更新。

下面是其大概的流程图:

- (SF-1)APP 画完一个frame以后,就会把其绘画的buffer放到buffer queue中.从生产者和消费者的关系来看,App是生产者,surfaceflinger进程是消费者.

- (SF-2)在SurfaceFlinger 进程内,当收到有更新的可用的frame时(需要render到LCD ),就会向EventThread请求Vsync event(requestNextVsync()).EventThread会记录下此请求,当下次Vsync event到来时,便会triggerVsync-sf event.

- DispSync 不断产生SW-VSYNCEVENT.当SW-VSYNCEVENT产生时,会检查是否有EventListener关心该event(SF和APP请求Vsync 时会在DispSync中创建EventListener并把其相应的DispSyncSource作为EventListener的callback),如有则调用EventListenercallback(DispSyncSource)的onDispSyncEvent.(callbacks[i].mCallback->onDispSyncEvent(callbacks[i].mEventTime)).

- DispSyncSource会引起Vsync-sf或Vsync-app跳变,当onDispSyncEvent()被调用时,并且会直接调用EventThread的onVSyncEvent().

- EventThread会把Vsync-sf或Vsync-app event通知到App或surfaceFlinger当Vsync-sfevent产生时(callonDispSyncEvent),surfaceflinger进程合成dirty layer的内容(SF-5)并通知Display HW把相关的更新到LCD上.App则在Vsync-ap时开始drawview.

- Display HW 便会在HW 的vsync 到来时,更新LCD 的内容(SF-6).

- 如果有Layer 的内容需要更新,surfaceflinger 便会把相关内容合成在一起,并且通知DisplayHW ,有相关的更新内容.

程序实现

我们来看看其代码的实现,继续回到SurfaceFlinger.cpp中的init函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

void SurfaceFlinger::init() { ...... // start the EventThread sp<VSyncSource> vsyncSrc = new DispSyncSource(&mPrimaryDispSync, vsyncPhaseOffsetNs, true, "app"); mEventThread = new EventThread(vsyncSrc); sp<VSyncSource> sfVsyncSrc = new DispSyncSource(&mPrimaryDispSync, sfVsyncPhaseOffsetNs, true, "sf"); mSFEventThread = new EventThread(sfVsyncSrc); mEventQueue.setEventThread(mSFEventThread); mEventControlThread = new EventControlThread(this); mEventControlThread->run("EventControl", PRIORITY_URGENT_DISPLAY); // set a fake vsync period if there is no HWComposer if (mHwc->initCheck() != NO_ERROR) { mPrimaryDispSync.setPeriod(16666667); } ...... } |

当surfaceFlinger初始化时,其创建了两个DispSyncSource和两个EventThread,一个用于APP,而另一个用于SurfaceFlinger本身。我们知道DispSyncSource和DispSync协同工作,共同完成Vsyncevent的fire。而EventThread会trigger App draw frame和surfaceFlinger合成”dirty” layer在SW-VSYNCevent产生时。

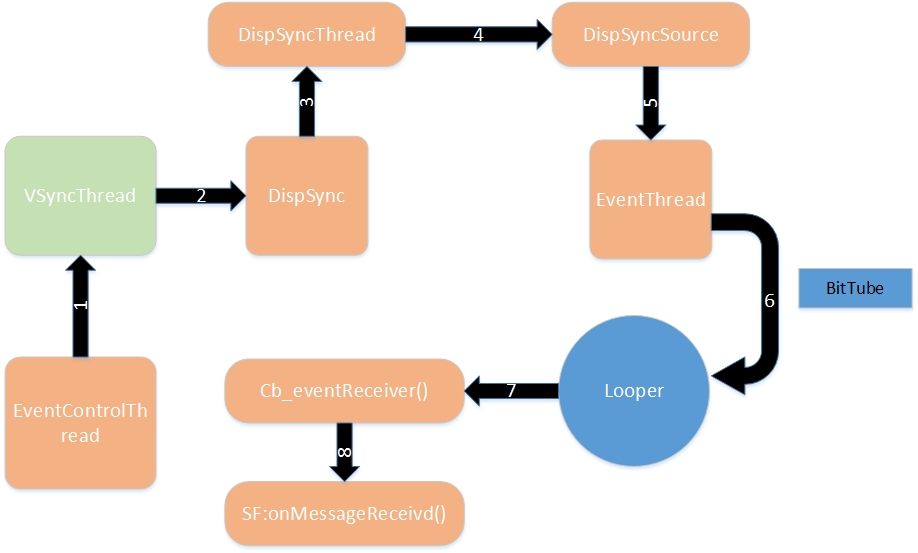

上面图形是Vsync信号从产生到驱动SF去工作的一个过程,其中绿色部分是HWC中的Vsync信号软件模拟进程,其他都是SF中的:

其中包含4个线程,EventControlThread,就像是Vsync信号产生的闸刀,当然闸刀肯定需要人去打开和关闭,这个人就是SF;

VsyncThread,下面的代码只介绍软件模拟的Vsync信号,这个线程主要工作就是循环定期产生信号,然后调用SF中的函数,这就相当于触发了;

DispSyncThread,是Vsync信号的模型,VsyncThread首先触发DispSyncThread,然后DispSyncThread再去驱动其他事件,它就是Vsync在SF中的代表;

EventThread,具体的事件线程,由DispSyncThread去驱动。

我们接下来根据Vsync-sf先关分析一下流程

DispSync和DispSyncThread

从上面SurfaceFlinger的init函数中,在构造DispSyncSource会传入一个mPrimaryDispSync的引用,它是SF类中有一个field为DispSync mPrimaryDispSync,因此在SF类创建时会在栈上生成这个对象。我们首先看看这个类的构造函数:

|

1 2 3 4 5 6 7 8 9 10 11 |

DispSync::DispSync() : mRefreshSkipCount(0), mThread(new DispSyncThread()) { //创建DispSyncThread线程,并运行 mThread->run("DispSync", PRIORITY_URGENT_DISPLAY + PRIORITY_MORE_FAVORABLE); //清除一些变量为0 reset(); beginResync(); ...... } |

在DispSync 构造函数中,创建了DispSyncThread线程,并运行。DispSyncThread实现也位于DispSync.cpp中:

|

1 2 3 4 5 6 |

DispSyncThread(): mStop(false), mPeriod(0), mPhase(0), mWakeupLatency(0) { } |

这里面将mStop设置为false,mPeriod设置为0,还有其他的也置为0。因为DispSyncThread是Thread派生的,所以我们需要看看它的线程函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 |

virtual bool threadLoop() { status_t err; nsecs_t now = systemTime(SYSTEM_TIME_MONOTONIC); nsecs_t nextEventTime = 0; while (true) { Vector<CallbackInvocation> callbackInvocations; nsecs_t targetTime = 0; { // Scope for lock Mutex::Autolock lock(mMutex); if (mStop) {//false return false; } if (mPeriod == 0) {//0 //mCond阻塞,当signal,同时mPeriod 不为0时,继续往下执行, err = mCond.wait(mMutex); if (err != NO_ERROR) { ALOGE("error waiting for new events: %s (%d)", strerror(-err), err); return false; } continue; } //计算下一次vsync信号时间时间 nextEventTime = computeNextEventTimeLocked(now); targetTime = nextEventTime; bool isWakeup = false; //如果还没到下一次vsync时间,则等待中间间隔时间 if (now < targetTime) { err = mCond.waitRelative(mMutex, targetTime - now); //等待超时,则唤醒 if (err == TIMED_OUT) { isWakeup = true; } else if (err != NO_ERROR) { ALOGE("error waiting for next event: %s (%d)", strerror(-err), err); return false; } } now = systemTime(SYSTEM_TIME_MONOTONIC); //唤醒触发 if (isWakeup) { //如果唤醒了,则计算Latency,如果Latency大于500000则强制Latency为500000 mWakeupLatency = ((mWakeupLatency * 63) + (now - targetTime)) / 64; if (mWakeupLatency > 500000) { // Don't correct by more than 500 us mWakeupLatency = 500000; } if (kTraceDetailedInfo) { ATRACE_INT64("DispSync:WakeupLat", now - nextEventTime); ATRACE_INT64("DispSync:AvgWakeupLat", mWakeupLatency); } } //gatherCallbackInvocationsLocked函数获取本次VSync信号的回调函数列表 //些回调函数是通过DispSync类的addEventListener函数加入的 callbackInvocations = gatherCallbackInvocationsLocked(now); } //接着就调用fireCallbackInvocations来依次调用列表中所有对象的onDispSyncEvent函数 if (callbackInvocations.size() > 0) { fireCallbackInvocations(callbackInvocations); } } return false; } |

DispSyncThread的threadLoop函数,主要这个函数比较计算时间来决定是否要发送信号。主要工作如下:

- 模型线程DispSyncThread阻塞在mCond,等待别人给mPeriod 赋值和signal;

- gatherCallbackInvocationsLocked函数获取本次VSync信号的回调函数列表,这些回调函数是通过DispSync类的addEventListener函数加入的;

- 接着就调用fireCallbackInvocations来依次调用列表中所有对象的onDispSyncEvent函数。

新建的DispSyncThread线程,目前被阻塞,先不管,我们先看模型DispSync和要驱动的事件(DispSyncSource,EventThread等)是如何联系起来的。

DispSyncSource和EventThread

在SF的init函数中,有如下代码,涉及到了DispSync,DispSyncSource,EventThread和mEventQueue的纠缠关系。我们可以看到在驱动事件DispSyncSource的构造中,我们输入了模型DispSync,这样就为回调创造了机会,下面看具体如何实现的。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

void SurfaceFlinger::init() { ...... // start the EventThread //把模型mPrimaryDispSync(DispSync)保存在DispSyncSource中 sp<VSyncSource> vsyncSrc = new DispSyncSource(&mPrimaryDispSync, vsyncPhaseOffsetNs, true, "app"); mEventThread = new EventThread(vsyncSrc); sp<VSyncSource> sfVsyncSrc = new DispSyncSource(&mPrimaryDispSync, sfVsyncPhaseOffsetNs, true, "sf"); mSFEventThread = new EventThread(sfVsyncSrc); mEventQueue.setEventThread(mSFEventThread); ...... } |

这个我们分布分析:

1)先看下DispSyncSource对象的建立,其实这个对象从名字上看就是模型所驱动的事件。我们看看它的构造函数,也位于SurfaceFlinger.cpp中:

|

1 2 3 4 5 6 7 8 |

DispSyncSource(DispSync* dispSync, nsecs_t phaseOffset, bool traceVsync, const char* label) : mValue(0), mPhaseOffset(phaseOffset), mTraceVsync(traceVsync), mVsyncOnLabel(String8::format("VsyncOn-%s", label)), mVsyncEventLabel(String8::format("VSYNC-%s", label)), mDispSync(dispSync) {} |

构造函数中主要设置了模型mDispSync(dispSync),以及触发时间的偏移量mPhaseOffset(phaseOffset)。这个实参vsyncPhaseOffsetNs和sfVsyncPhaseOffsetNs定义如下,也位于SurfaceFlinger.cpp中:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

// This is the phase offset in nanoseconds of the software vsync event // relative to the vsync event reported by HWComposer. The software vsync // event is when SurfaceFlinger and Choreographer-based applications run each // frame. // // This phase offset allows adjustment of the minimum latency from application // wake-up (by Choregographer) time to the time at which the resulting window // image is displayed. This value may be either positive (after the HW vsync) // or negative (before the HW vsync). Setting it to 0 will result in a // minimum latency of two vsync periods because the app and SurfaceFlinger // will run just after the HW vsync. Setting it to a positive number will // result in the minimum latency being: // // (2 * VSYNC_PERIOD - (vsyncPhaseOffsetNs % VSYNC_PERIOD)) // // Note that reducing this latency makes it more likely for the applications // to not have their window content image ready in time. When this happens // the latency will end up being an additional vsync period, and animations // will hiccup. Therefore, this latency should be tuned somewhat // conservatively (or at least with awareness of the trade-off being made). static const int64_t vsyncPhaseOffsetNs = VSYNC_EVENT_PHASE_OFFSET_NS; // This is the phase offset at which SurfaceFlinger's composition runs. static const int64_t sfVsyncPhaseOffsetNs = SF_VSYNC_EVENT_PHASE_OFFSET_NS; |

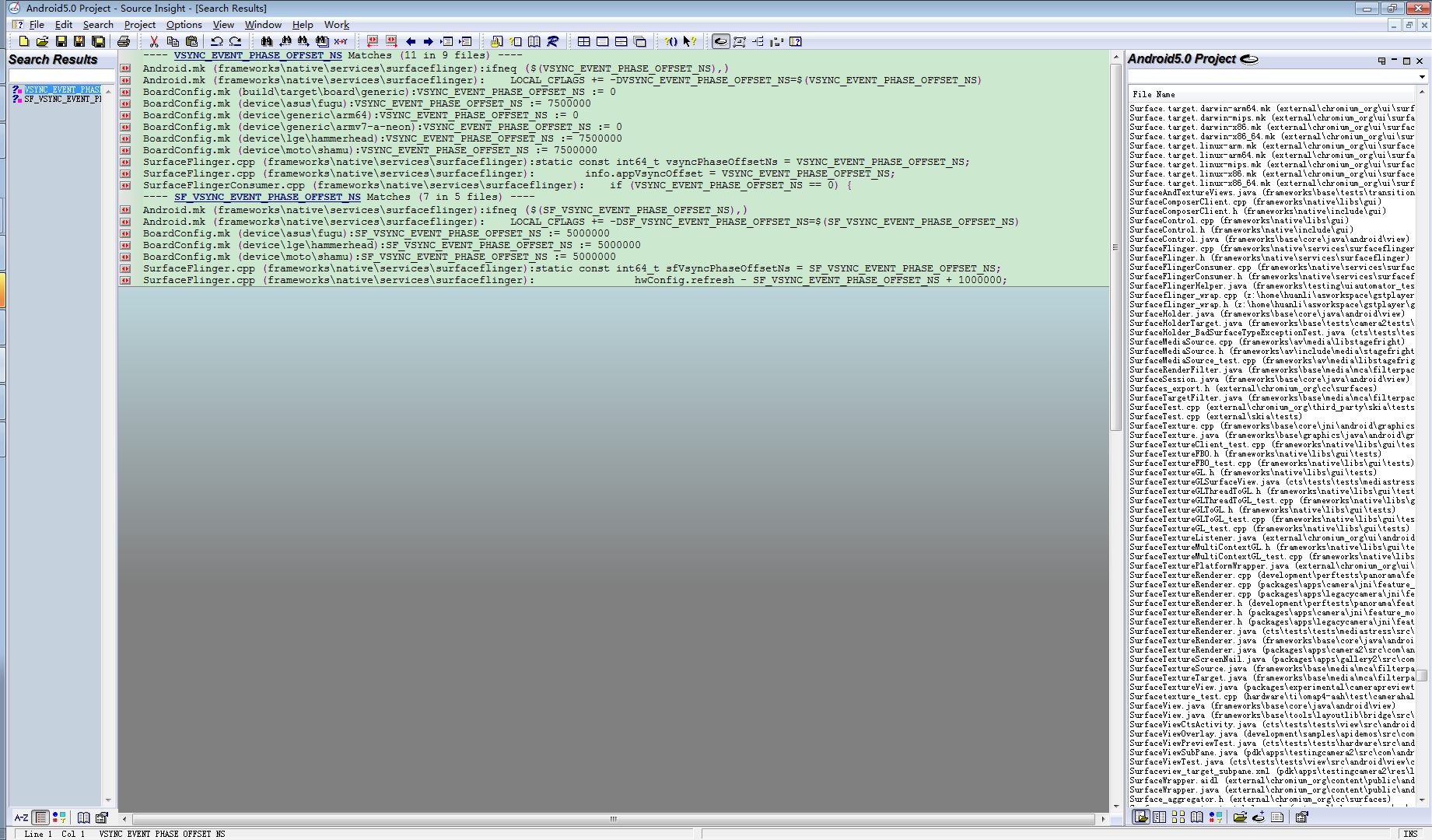

这个phase就是用来调节vsync信号latency 时间,其定义我也是搜了整个工程才找到如下定义:

generic版本中phase-app为0,phase-sf为5000000。(不知道对不对=。=)

2)我们继续往下看,mSFEventThread = new EventThread(sfVsyncSrc);先看看EventThread的构造函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

EventThread::EventThread(const sp<VSyncSource>& src) : mVSyncSource(src), mUseSoftwareVSync(false),//软vsync初始为false mVsyncEnabled(false),//vsync使能初始为false mDebugVsyncEnabled(false), mVsyncHintSent(false) { //i < 2,只遍历主屏幕和扩展屏幕 for (int32_t i=0 ; i < DisplayDevice::NUM_BUILTIN_DISPLAY_TYPES ; i++) { mVSyncEvent[i].header.type = DisplayEventReceiver::DISPLAY_EVENT_VSYNC; mVSyncEvent[i].header.id = 0; mVSyncEvent[i].header.timestamp = 0; mVSyncEvent[i].vsync.count = 0; } struct sigevent se; se.sigev_notify = SIGEV_THREAD; se.sigev_value.sival_ptr = this; se.sigev_notify_function = vsyncOffCallback; se.sigev_notify_attributes = NULL; timer_create(CLOCK_MONOTONIC, &se, &mTimerId); } |

EventThread是Thread的派生类,还是RefBase的间接派生类,所以我们需要产看它的onFirstRef函数:

|

1 2 3 |

void EventThread::onFirstRef() { run("EventThread", PRIORITY_URGENT_DISPLAY + PRIORITY_MORE_FAVORABLE); } |

将这个线程启动了起来,那么我们需要看下线程循环函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 |

bool EventThread::threadLoop() { DisplayEventReceiver::Event event; Vector< sp<EventThread::Connection> > signalConnections; //等待事件到来 signalConnections = waitForEvent(&event); // dispatch events to listeners... //把事件分发给listener const size_t count = signalConnections.size(); for (size_t i=0 ; i < count ; i++) { const sp<Connection>& conn(signalConnections[i]); // now see if we still need to report this event //然后调用每个连接的postEvent来发送Event status_t err = conn->postEvent(event); if (err == -EAGAIN || err == -EWOULDBLOCK) { // The destination doesn't accept events anymore, it's probably // full. For now, we just drop the events on the floor. // FIXME: Note that some events cannot be dropped and would have // to be re-sent later. // Right-now we don't have the ability to do this. ALOGW("EventThread: dropping event (%08x) for connection %p", event.header.type, conn.get()); } else if (err < 0) { // handle any other error on the pipe as fatal. the only // reasonable thing to do is to clean-up this connection. // The most common error we'll get here is -EPIPE. removeDisplayEventConnection(signalConnections[i]); } } return true; } |

这个线程函数里面主要做了三件事:

- 调用waitForEvent等待事件到来;

- 把事件分发给listener;

- 然后调用每个连接的postEvent来发送Event。

我们分部查看,先看看waitForEvent实现:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 |

// This will return when (1) a vsync event has been received, and (2) there was // at least one connection interested in receiving it when we started waiting. Vector< sp<EventThread::Connection> > EventThread::waitForEvent( DisplayEventReceiver::Event* event) { Mutex::Autolock _l(mLock); Vector< sp<EventThread::Connection> > signalConnections; do { bool eventPending = false; bool waitForVSync = false; size_t vsyncCount = 0; nsecs_t timestamp = 0; //上面初始化EventThread时候,都是0 for (int32_t i=0 ; i < DisplayDevice::NUM_BUILTIN_DISPLAY_TYPES ; i++) { timestamp = mVSyncEvent[i].header.timestamp; if (timestamp) { // we have a vsync event to dispatch *event = mVSyncEvent[i]; mVSyncEvent[i].header.timestamp = 0; vsyncCount = mVSyncEvent[i].vsync.count; break; } } if (!timestamp) {//0 // no vsync event, see if there are some other event eventPending = !mPendingEvents.isEmpty(); if (eventPending) {//初始时候为false // we have some other event to dispatch *event = mPendingEvents[0]; mPendingEvents.removeAt(0); } } // find out connections waiting for events //// 初始也是空的 SortedVector< wp<Connection> > mDisplayEventConnections; size_t count = mDisplayEventConnections.size(); for (size_t i=0 ; i < count ; i++) { sp<Connection> connection(mDisplayEventConnections[i].promote()); if (connection != NULL) { bool added = false; if (connection->count >= 0) { // we need vsync events because at least // one connection is waiting for it waitForVSync = true; if (timestamp) { // we consume the event only if it's time // (ie: we received a vsync event) if (connection->count == 0) { // fired this time around connection->count = -1; signalConnections.add(connection); added = true; } else if (connection->count == 1 || (vsyncCount % connection->count) == 0) { // continuous event, and time to report it signalConnections.add(connection); added = true; } } } if (eventPending && !timestamp && !added) { // we don't have a vsync event to process // (timestamp==0), but we have some pending // messages. signalConnections.add(connection); } } else { // we couldn't promote this reference, the connection has // died, so clean-up! mDisplayEventConnections.removeAt(i); --i; --count; } } // Here we figure out if we need to enable or disable vsyncs if (timestamp && !waitForVSync) { // we received a VSYNC but we have no clients // don't report it, and disable VSYNC events disableVSyncLocked(); } else if (!timestamp && waitForVSync) { // we have at least one client, so we want vsync enabled // (TODO: this function is called right after we finish // notifying clients of a vsync, so this call will be made // at the vsync rate, e.g. 60fps. If we can accurately // track the current state we could avoid making this call // so often.) enableVSyncLocked(); } // note: !timestamp implies signalConnections.isEmpty(), because we // don't populate signalConnections if there's no vsync pending if (!timestamp && !eventPending) { // wait for something to happen if (waitForVSync) { // This is where we spend most of our time, waiting // for vsync events and new client registrations. // // If the screen is off, we can't use h/w vsync, so we // use a 16ms timeout instead. It doesn't need to be // precise, we just need to keep feeding our clients. // // We don't want to stall if there's a driver bug, so we // use a (long) timeout when waiting for h/w vsync, and // generate fake events when necessary. bool softwareSync = mUseSoftwareVSync; nsecs_t timeout = softwareSync ? ms2ns(16) : ms2ns(1000); if (mCondition.waitRelative(mLock, timeout) == TIMED_OUT) { if (!softwareSync) { ALOGW("Timed out waiting for hw vsync; faking it"); } // FIXME: how do we decide which display id the fake // vsync came from ? mVSyncEvent[0].header.type = DisplayEventReceiver::DISPLAY_EVENT_VSYNC; mVSyncEvent[0].header.id = DisplayDevice::DISPLAY_PRIMARY; mVSyncEvent[0].header.timestamp = systemTime(SYSTEM_TIME_MONOTONIC); mVSyncEvent[0].vsync.count++; } } else { // Nobody is interested in vsync, so we just want to sleep. // h/w vsync should be disabled, so this will wait until we // get a new connection, or an existing connection becomes // interested in receiving vsync again. //所以EventThread初始的时候会在这阻塞 mCondition.wait(mLock); } } } while (signalConnections.isEmpty()); //因为mCondition有可能异常返回,所以要看下这个while,就知道何时mCondition被正常的signal //如果signalConnections不为空了,这时候就会从while中退出来 //也就是上面的mDisplayEventConnections有东西了 // here we're guaranteed to have a timestamp and some connections to signal // (The connections might have dropped out of mDisplayEventConnections // while we were asleep, but we'll still have strong references to them.) return signalConnections; } |

waitForEvent函数中下面代码段,timestamp为0表示没有时间,waitForSync为true表示至少有一个客户和EventThread建立了连接。这段代码一旦有客户连接,就调用enableVSyncLocked接收DispSyncSource的VSync信号。如果在接受信号中,所有客户都断开了连接,则调用disableVSyncLocked函数停止接受DispSyncSource对象的信号。

虽然代码量有些多,但是我们是初始化时候,所以省去了很多判断逻辑。因此主要工作就不多了,主要如下:

1. 初始的时候,mCondition会阻塞;

2. 因为mCondition有可能异常返回,所以要看下外围的while循环,就知道何时mCondition会被signal。即使mCondition异常返回,也会再去判断signalConnections是否为空。空的话继续阻塞,如果signalConnections不为空了,这时候就会从while中退出来,也就是上面的mDisplayEventConnections有东西了。所以mDisplayEventConnections需要留意何时赋值啦。

至此,创建了一个mSFEventThread = new EventThread(sfVsyncSrc);,也阻塞着了。。

至于下面的 2. 把事件分发给listener;3. 然后调用每个连接的postEvent来发送Event。这两个步骤我们下面会讲到,这里先不看它。

3)从上面得知在DsipSync里面创建的DispSyncThread线程阻塞等待着;还有上面的EventThread线程,也阻塞等待了。

下面我们继续看mEventQueue.setEventThread(mSFEventThread);会不会解除阻塞。这里EventThread和SF主线程的MessageQueue又纠缠到了一起:

|

1 2 3 4 5 6 7 8 9 10 11 |

void MessageQueue::setEventThread(const sp<EventThread>& eventThread) { mEventThread = eventThread; //SF与EventThread建立连接 mEvents = eventThread->createEventConnection(); //创建通信通道 mEventTube = mEvents->getDataChannel(); //Looper连接通道,进行消息循环 mLooper->addFd(mEventTube->getFd(), 0, Looper::EVENT_INPUT, MessageQueue::cb_eventReceiver, this); } |

我们依然分部查看:

1. 首先, eventThread->createEventConnection(),新建了一个Connection对象:

|

1 2 3 |

sp<EventThread::Connection> EventThread::createEventConnection() const { return new Connection(const_cast<EventThread*>(this)); } |

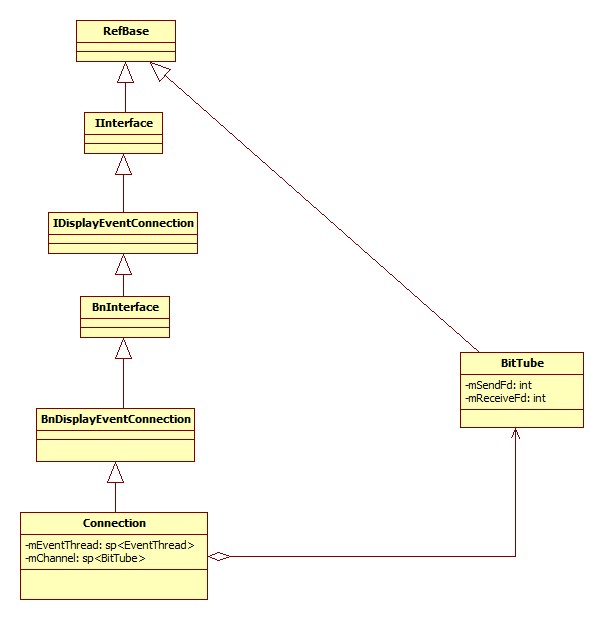

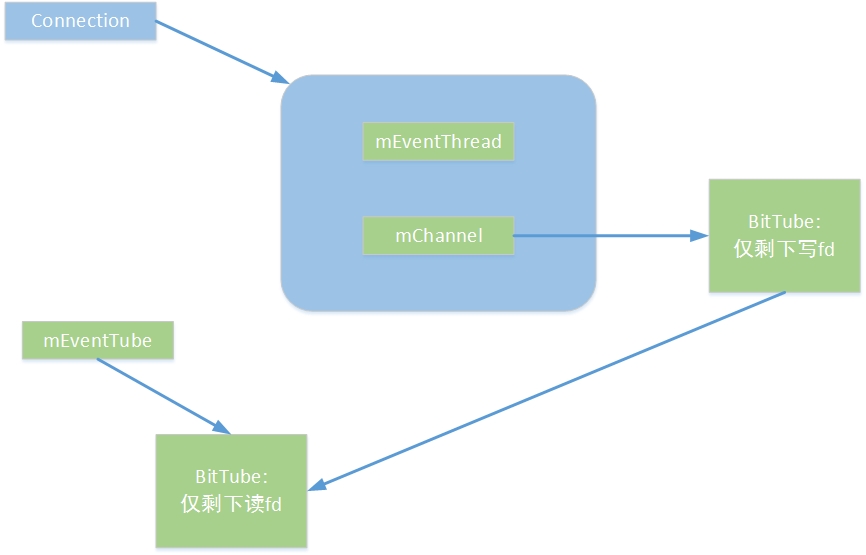

Connection对象如下图所示:

Connection中保存了了一个EventThread对象,和一个生成的BitTube对象mChannel,下面看下Connection的构造函数:

|

1 2 3 4 5 |

EventThread::Connection::Connection( const sp<EventThread>& eventThread) : count(-1), mEventThread(eventThread), mChannel(new BitTube()) { } |

调用了BitTube的无参构造函数:

|

1 2 3 4 5 |

BitTube::BitTube() : mSendFd(-1), mReceiveFd(-1) { init(DEFAULT_SOCKET_BUFFER_SIZE, DEFAULT_SOCKET_BUFFER_SIZE); } |

构造函数里调用了init函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

void BitTube::init(size_t rcvbuf, size_t sndbuf) { int sockets[2]; if (socketpair(AF_UNIX, SOCK_SEQPACKET, 0, sockets) == 0) { size_t size = DEFAULT_SOCKET_BUFFER_SIZE; setsockopt(sockets[0], SOL_SOCKET, SO_RCVBUF, &rcvbuf, sizeof(rcvbuf)); setsockopt(sockets[1], SOL_SOCKET, SO_SNDBUF, &sndbuf, sizeof(sndbuf)); // sine we don't use the "return channel", we keep it small... setsockopt(sockets[0], SOL_SOCKET, SO_SNDBUF, &size, sizeof(size)); setsockopt(sockets[1], SOL_SOCKET, SO_RCVBUF, &size, sizeof(size)); fcntl(sockets[0], F_SETFL, O_NONBLOCK); fcntl(sockets[1], F_SETFL, O_NONBLOCK); mReceiveFd = sockets[0]; mSendFd = sockets[1]; } else { mReceiveFd = -errno; ALOGE("BitTube: pipe creation failed (%s)", strerror(-mReceiveFd)); } } |

建立一个域套接字,用fcntl设置成非阻塞模式,然后两个fd,一个读一个写,mSendFd和mReceiveFd。

我们上面创建一个Connection,但是又是一个sp指针,所以就得看看它的onFirstRef函数:

|

1 2 3 4 |

void EventThread::Connection::onFirstRef() { // NOTE: mEventThread doesn't hold a strong reference on us mEventThread->registerDisplayEventConnection(this); } |

这里又调用EventThread的registerDisplayEventConnection函数,我们继续查看:

|

1 2 3 4 5 6 7 8 9 |

status_t EventThread::registerDisplayEventConnection( const sp<EventThread::Connection>& connection) { Mutex::Autolock _l(mLock); //把connection添加到mDisplayEventConnections mDisplayEventConnections.add(connection); //mCondition解除 mCondition.broadcast(); return NO_ERROR; } |

前面提到,EventThread一直阻塞在waitForEvent中,正是这个mCondition,这里也对mDisplayEventConnections添加了东西,不为空了。

为了方便分析,我们再把前面的waitForEvent函数列出来,这次就是另一套逻辑了:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 |

// This will return when (1) a vsync event has been received, and (2) there was // at least one connection interested in receiving it when we started waiting. Vector< sp<EventThread::Connection> > EventThread::waitForEvent( DisplayEventReceiver::Event* event) { Mutex::Autolock _l(mLock); Vector< sp<EventThread::Connection> > signalConnections; do { bool eventPending = false; bool waitForVSync = false; size_t vsyncCount = 0; nsecs_t timestamp = 0; for (int32_t i=0 ; i < DisplayDevice::NUM_BUILTIN_DISPLAY_TYPES ; i++) { timestamp = mVSyncEvent[i].header.timestamp; if (timestamp) { // we have a vsync event to dispatch *event = mVSyncEvent[i]; mVSyncEvent[i].header.timestamp = 0; vsyncCount = mVSyncEvent[i].vsync.count; break; } } if (!timestamp) {//这次这里还是0 // no vsync event, see if there are some other event eventPending = !mPendingEvents.isEmpty(); if (eventPending) { // we have some other event to dispatch *event = mPendingEvents[0]; mPendingEvents.removeAt(0); } } // find out connections waiting for events //有东西了,就是保存的Connection size_t count = mDisplayEventConnections.size(); for (size_t i=0 ; i < count ; i++) { sp<Connection> connection(mDisplayEventConnections[i].promote()); if (connection != NULL) { bool added = false; if (connection->count >= 0) { // we need vsync events because at least // one connection is waiting for it waitForVSync = true; if (timestamp) { // we consume the event only if it's time // (ie: we received a vsync event) if (connection->count == 0) { // fired this time around connection->count = -1; signalConnections.add(connection); added = true; } else if (connection->count == 1 || (vsyncCount % connection->count) == 0) { // continuous event, and time to report it signalConnections.add(connection); added = true; } } } if (eventPending && !timestamp && !added) { // we don't have a vsync event to process // (timestamp==0), but we have some pending // messages. signalConnections.add(connection); } } else { // we couldn't promote this reference, the connection has // died, so clean-up! mDisplayEventConnections.removeAt(i); --i; --count; } } // Here we figure out if we need to enable or disable vsyncs if (timestamp && !waitForVSync) {//0 true // we received a VSYNC but we have no clients // don't report it, and disable VSYNC events disableVSyncLocked(); } else if (!timestamp && waitForVSync) {//所以走到了这里 // we have at least one client, so we want vsync enabled // (TODO: this function is called right after we finish // notifying clients of a vsync, so this call will be made // at the vsync rate, e.g. 60fps. If we can accurately // track the current state we could avoid making this call // so often.) //这次走到了这里 enableVSyncLocked(); } // note: !timestamp implies signalConnections.isEmpty(), because we // don't populate signalConnections if there's no vsync pending if (!timestamp && !eventPending) {//0 false // wait for something to happen if (waitForVSync) {//true // This is where we spend most of our time, waiting // for vsync events and new client registrations. // // If the screen is off, we can't use h/w vsync, so we // use a 16ms timeout instead. It doesn't need to be // precise, we just need to keep feeding our clients. // // We don't want to stall if there's a driver bug, so we // use a (long) timeout when waiting for h/w vsync, and // generate fake events when necessary. bool softwareSync = mUseSoftwareVSync; nsecs_t timeout = softwareSync ? ms2ns(16) : ms2ns(1000); if (mCondition.waitRelative(mLock, timeout) == TIMED_OUT) { if (!softwareSync) { ALOGW("Timed out waiting for hw vsync; faking it"); } // FIXME: how do we decide which display id the fake // vsync came from ? mVSyncEvent[0].header.type = DisplayEventReceiver::DISPLAY_EVENT_VSYNC; mVSyncEvent[0].header.id = DisplayDevice::DISPLAY_PRIMARY; mVSyncEvent[0].header.timestamp = systemTime(SYSTEM_TIME_MONOTONIC); mVSyncEvent[0].vsync.count++; } } else { // Nobody is interested in vsync, so we just want to sleep. // h/w vsync should be disabled, so this will wait until we // get a new connection, or an existing connection becomes // interested in receiving vsync again. mCondition.wait(mLock);//这个之前的wait已经解开了 } } } while (signalConnections.isEmpty()); // here we're guaranteed to have a timestamp and some connections to signal // (The connections might have dropped out of mDisplayEventConnections // while we were asleep, but we'll still have strong references to them.) return signalConnections; } |

所以在waitEvent里,这次的逻辑和上次不同了,主要是一下几点:

- 建立Connection后,mCondition返回了;

- 这里timestamp 还是0 ;

- mDisplayEventConnections非空了,将waitForVSync = true;

- 所以会执行到enableVSyncLocked();。

那么我们看看enableVSyncLocked函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

void EventThread::enableVSyncLocked() { if (!mUseSoftwareVSync) {//false // never enable h/w VSYNC when screen is off if (!mVsyncEnabled) {//false mVsyncEnabled = true;//将mVsyncEnabled 置为true //DispSyncSource的setCallback,把mCallback设置为EventThread mVSyncSource->setCallback(static_cast<VSyncSource::Callback*>(this)); //调用DispSyncSource的setVSyncEnabled mVSyncSource->setVSyncEnabled(true); } } mDebugVsyncEnabled = true; sendVsyncHintOnLocked(); } |

这个也分两步:

①将mVsyncEnabled 设为true,调用mVSyncSource->setCallback(static_cast(this));,this为EventThread,既是调用DispSyncSource的setCallback,把mCallback设置为EventThread:

|

1 2 3 4 |

virtual void setCallback(const sp<VSyncSource::Callback>& callback) { Mutex::Autolock lock(mMutex); mCallback = callback; } |

②调用mVSyncSource->setVSyncEnabled(true);,既是调用DispSyncSource的setVSyncEnabled:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

virtual void setVSyncEnabled(bool enable) {//true // Do NOT lock the mutex here so as to avoid any mutex ordering issues // with locking it in the onDispSyncEvent callback. if (enable) { ////在硬件模型mDispSync中添加addEventListener,一个参数为偏移量,一个为DispSyncSource status_t err = mDispSync->addEventListener(mPhaseOffset, static_cast<DispSync::Callback*>(this)); if (err != NO_ERROR) { ALOGE("error registering vsync callback: %s (%d)", strerror(-err), err); } //ATRACE_INT(mVsyncOnLabel.string(), 1); } else { status_t err = mDispSync->removeEventListener( static_cast<DispSync::Callback*>(this)); if (err != NO_ERROR) { ALOGE("error unregistering vsync callback: %s (%d)", strerror(-err), err); } //ATRACE_INT(mVsyncOnLabel.string(), 0); } } |

从上面的代码可以看出,这里是将驱动事件DispSyncSource和硬件模型mDispSync建立起关系。既然调用了DispSync的addEventListener,那么我们就继续查看:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 |

//DispSync的addEventListener status_t DispSync::addEventListener(nsecs_t phase, const sp<Callback>& callback) { Mutex::Autolock lock(mMutex); return mThread->addEventListener(phase, callback); } //DispSyncThread的addEventListener status_t addEventListener(nsecs_t phase, const sp<DispSync::Callback>& callback) { Mutex::Autolock lock(mMutex); for (size_t i = 0; i < mEventListeners.size(); i++) { if (mEventListeners[i].mCallback == callback) { return BAD_VALUE; } } EventListener listener; listener.mPhase = phase; listener.mCallback = callback; // We want to allow the firstmost future event to fire without // allowing any past events to fire. Because // computeListenerNextEventTimeLocked filters out events within a half // a period of the last event time, we need to initialize the last // event time to a half a period in the past. listener.mLastEventTime = systemTime(SYSTEM_TIME_MONOTONIC) - mPeriod / 2; ////把listener放到mEventListeners中 mEventListeners.push(listener); ////释放mCond mCond.signal(); return NO_ERROR; } |

这里可以看出,驱动事件DispSyncSource是硬件模型DispSync的“listener”,监听者,把两者联系了起来。并把DispSyncThread线程中的阻塞mCond解除,但是,前面我们分析过,还要mPeriod 非0。我们可以回顾一下上面的代码片段,DispSyncThread的threadLoop函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

virtual bool threadLoop() { ...... while(true){ ...... if (mPeriod == 0) { err = mCond.wait(mMutex); if (err != NO_ERROR) { ALOGE("error waiting for new events: %s (%d)", strerror(-err), err); return false; } continue; } ...... } ...... } |

那么哪里给mPeriod 赋值呢?我们上面讲EventControlThread闸刀控制线程的时候,initializeDisplays()函数会被调用,最终会调用resyncToHardwareVsync函数,这个里面会获取mPeriod屏幕刷新率,然后给mPeriod 赋值:

|

1 2 3 4 5 6 7 8 9 |

void SurfaceFlinger::resyncToHardwareVsync(bool makeAvailable) { ...... const nsecs_t period = getHwComposer().getRefreshPeriod(HWC_DISPLAY_PRIMARY); mPrimaryDispSync.reset(); mPrimaryDispSync.setPeriod(period); ...... } |

然后调用DispSync的setPeriod函数:

|

1 2 3 4 5 6 7 |

void DispSync::setPeriod(nsecs_t period) { Mutex::Autolock lock(mMutex); mPeriod = period;//mPeriod 赋值后,已经不为0 mPhase = 0; //调用线程的更新模型函数 mThread->updateModel(mPeriod, mPhase); } |

mPeriod 赋值后,已经不为0;然后调用线程的更新模型函数updateModel:

|

1 2 3 4 5 6 7 |

void updateModel(nsecs_t period, nsecs_t phase) { Mutex::Autolock lock(mMutex); mPeriod = period; mPhase = phase; //mCond阻塞解除 mCond.signal(); } |

至此,DispSync中设置了监听者DispSyncSource,mPeriod 也不为0,硬件模型线程不再阻塞,不阻塞就会去处理vsync事件,这个我们后面会讲。

经过调用mEvents = eventThread->createEventConnection();完成了一下几个功能:

- MessageQueue中保存了一个Connection,mEvents ;

- EventThread中保存了这个Connection,mDisplayEventConnections.add(connection);

- mVSyncSource->setCallback 把mCallback = callback 设置为EventThread;

- 在mDispSync中注册listener, 放到DispSyncthread的mEventListeners中,这个listener的callback就是mVSyncSource。

2. 然后就是创建通信通道。接着继续MessageQueue::setEventThread()函数,调用mEventTube = mEvents->getDataChannel()。

mEvents类型为sp< IDisplayEventConnection > ;在上一步mEvents = eventThread->createEventConnection();返回的直接是Connection对象。Connection继承于BnDisplayEventConnection,BnDisplayEventConnection继承于BnInterface< IDisplayEventConnection >。因此我们找到bp端的getDataChannel函数,位于BpDisplayEventConnection类当中,继承于BpInterface< IDisplayEventConnection >位于frameworks/native/libs/gui/IDisplayEventConnection.cpp:

|

1 2 3 4 5 6 7 |

virtual sp<BitTube> getDataChannel() const { Parcel data, reply; data.writeInterfaceToken(IDisplayEventConnection::getInterfaceDescriptor()); remote()->transact(GET_DATA_CHANNEL, data, &reply); return new BitTube(reply); } |

相应的bn端位onTransact方法于也位于IDisplayEventConnection.cpp:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

status_t BnDisplayEventConnection::onTransact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags) { switch(code) { case GET_DATA_CHANNEL: { CHECK_INTERFACE(IDisplayEventConnection, data, reply); sp<BitTube> channel(getDataChannel());//这个getDataChannel是Connection类的函数 channel->writeToParcel(reply); return NO_ERROR; } break; ...... } return BBinder::onTransact(code, data, reply, flags); } |

上面那个getDataChannel是Connection类的函数,我们看看Connection的getDataChannel函数:

|

1 2 3 |

sp<BitTube> EventThread::Connection::getDataChannel() const { return mChannel; } |

mChannel就是我们在构造函数里面的new BitTube()。

这样mEventTube 中只包含了读fd,而mEvents这个connection中的mChannel只剩下写fd,两个依然是一对读写,但是分开了,如下图所示:

3. 继续调用,这里就是把mEventTube这个读tube注册到SF主线程的Looper中去,回调函数为MessageQueue::cb_eventReceiver:

|

1 2 |

mLooper->addFd(mEventTube->getFd(), 0, ALOOPER_EVENT_INPUT, MessageQueue::cb_eventReceiver, this); |

这里用到了Android消息处理零散分析的内容,如果忘记了,可以翻一翻。

因为注册了回调事件,因此我们需要查看MessageQueue::cb_eventReceiver函数:

|

1 2 3 4 |

int MessageQueue::cb_eventReceiver(int fd, int events, void* data) { MessageQueue* queue = reinterpret_cast<MessageQueue *>(data); return queue->eventReceiver(fd, events); } |

然后是eventReceiver函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

int MessageQueue::eventReceiver(int /*fd*/, int /*events*/) { ssize_t n; DisplayEventReceiver::Event buffer[8]; while ((n = DisplayEventReceiver::getEvents(mEventTube, buffer, 8)) > 0) { for (int i=0 ; i < n ; i++) { if (buffer[i].header.type == DisplayEventReceiver::DISPLAY_EVENT_VSYNC) { #if INVALIDATE_ON_VSYNC //1 mHandler->dispatchInvalidate(); #else mHandler->dispatchRefresh(); #endif break; } } } return 1; } |

这里会从MessageQueue的读BitTube中读出event,然后调用mHandler->dispatchInvalidate():

|

1 2 3 4 5 |

void MessageQueue::Handler::dispatchInvalidate() { if ((android_atomic_or(eventMaskInvalidate, &mEventMask) & eventMaskInvalidate) == 0) { mQueue.mLooper->sendMessage(this, Message(MessageQueue::INVALIDATE)); } } |

接收消息就是handleMessage函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

void MessageQueue::Handler::handleMessage(const Message& message) { switch (message.what) { case INVALIDATE: android_atomic_and(~eventMaskInvalidate, &mEventMask); mQueue.mFlinger->onMessageReceived(message.what); break; case REFRESH: android_atomic_and(~eventMaskRefresh, &mEventMask); mQueue.mFlinger->onMessageReceived(message.what); break; case TRANSACTION: android_atomic_and(~eventMaskTransaction, &mEventMask); mQueue.mFlinger->onMessageReceived(message.what); break; } } |

进而去调用SF的onMessageReceived函数,最终每次Vsync信号来了,SF都会去执行handleMessageTransaction()等函数。

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

void SurfaceFlinger::onMessageReceived(int32_t what) { ATRACE_CALL(); switch (what) { case MessageQueue::TRANSACTION: handleMessageTransaction(); break; case MessageQueue::INVALIDATE: handleMessageTransaction(); handleMessageInvalidate(); signalRefresh(); break; case MessageQueue::REFRESH: handleMessageRefresh(); break; } } |

至此我们对于vsync消息的准备逻辑工作都做完了。但是细心的读者应该发现了,我们还没有按正常流程处理一遍vsync信号,就是当vsync产生后,SurfaceFlinger的回调函数onVSyncReceived处理过程。接下来我们就分析一下这个过程。

VSync信号处理

矫正VSync时间

我们再次借助上面那幅Vsync event产生的示意图:

这里HW_VSYNC就是HW_VSYNC_0。

当SF从HWComposer收到VSYNC(HW_VSYNC_0)时,会调用onVSyncReceived函数处理:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

void SurfaceFlinger::onVSyncReceived(int type, nsecs_t timestamp) { bool needsHwVsync = false; { // Scope for the lock Mutex::Autolock _l(mHWVsyncLock); if (type == 0 && mPrimaryHWVsyncEnabled) {//将新的VSYNC时间交给DispSync needsHwVsync = mPrimaryDispSync.addResyncSample(timestamp); } } if (needsHwVsync) {//是否还需要vsync enableHardwareVsync(); } else { disableHardwareVsync(false); } } |

它会利用DispSync::addResyncSample将新的VSYNC时间交给DispSync。addResyncSample决定是否还需要HW_VSYNC的输入,如果不需要,就会将HW_VSYNC关掉。

然后我们继续查看DispSync::addResyncSample:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

bool DispSync::addResyncSample(nsecs_t timestamp) { Mutex::Autolock lock(mMutex); //MAX_RESYNC_SAMPLES = 32 size_t idx = (mFirstResyncSample + mNumResyncSamples) % MAX_RESYNC_SAMPLES; mResyncSamples[idx] = timestamp; if (mNumResyncSamples < MAX_RESYNC_SAMPLES) { mNumResyncSamples++; } else { mFirstResyncSample = (mFirstResyncSample + 1) % MAX_RESYNC_SAMPLES; } //这个函数很重要,用来计算SW_VSYNC的值,直到误差小于threshold updateModelLocked(); //MAX_RESYNC_SAMPLES_WITHOUT_PRESENT = 12 if (mNumResyncSamplesSincePresent++ > MAX_RESYNC_SAMPLES_WITHOUT_PRESENT) { resetErrorLocked(); } if (kIgnorePresentFences) { // If we don't have the sync framework we will never have // addPresentFence called. This means we have no way to know whether // or not we're synchronized with the HW vsyncs, so we just request // that the HW vsync events be turned on whenever we need to generate // SW vsync events. return mThread->hasAnyEventListeners(); } return mPeriod == 0 || mError > kErrorThreshold; } |

DispSync是利用HW_VSYNC和PresentFence来判断是否需要开启HW_VSYNC。HW_VSYNC最少要3个,最多是32个,实际上要用几个则不一定,DispSync拿到3个HW_VSYNC后就会计算出SW_VSYNC,只要收到的PresentFence没有超过误差,则HW_VSYNC就会关掉,以便节省功耗.不然会继续开启HW_VSYNC计算SW_VSYNC的值,直到误差小于threshold。其计算的方法是DispSync::updateModelLocked():

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 |

void DispSync::updateModelLocked() { if (mNumResyncSamples >= MIN_RESYNC_SAMPLES_FOR_UPDATE) { nsecs_t durationSum = 0; for (size_t i = 1; i < mNumResyncSamples; i++) { size_t idx = (mFirstResyncSample + i) % MAX_RESYNC_SAMPLES; size_t prev = (idx + MAX_RESYNC_SAMPLES - 1) % MAX_RESYNC_SAMPLES; durationSum += mResyncSamples[idx] - mResyncSamples[prev]; } mPeriod = durationSum / (mNumResyncSamples - 1); double sampleAvgX = 0; double sampleAvgY = 0; double scale = 2.0 * M_PI / double(mPeriod); for (size_t i = 0; i < mNumResyncSamples; i++) { size_t idx = (mFirstResyncSample + i) % MAX_RESYNC_SAMPLES; nsecs_t sample = mResyncSamples[idx]; double samplePhase = double(sample % mPeriod) * scale; sampleAvgX += cos(samplePhase); sampleAvgY += sin(samplePhase); } sampleAvgX /= double(mNumResyncSamples); sampleAvgY /= double(mNumResyncSamples); mPhase = nsecs_t(atan2(sampleAvgY, sampleAvgX) / scale); if (mPhase < 0) { mPhase += mPeriod; } if (kTraceDetailedInfo) { ATRACE_INT64("DispSync:Period", mPeriod); ATRACE_INT64("DispSync:Phase", mPhase); } // Artificially inflate the period if requested. mPeriod += mPeriod * mRefreshSkipCount; mThread->updateModel(mPeriod, mPhase); } } |

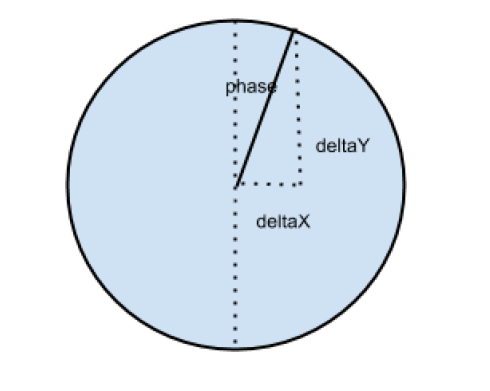

基本思想如下:

- 计算目前收到HW_VSYNC间隔,取平均值(AvgPeriod) HW_VSYNC;

- 将每个收到的VSYNC时间与AvgPeriod算出误差. (Delta = Time %AvgPeriod);

- 将Delta转换成角度(DeltaPhase),如果AvgPeriod是360度,DeltaPhase = 2PIDelta/AvgPeriod;

- 从DeltaPhase可以得到DeltaX与DeltaY (DeltaX =cos(DeltaPhase), DeltaY = sin(DeltaPhase));

- 将每个收到的VSYNC的DeltaX与DeltaY取平均,可以得到AvgX与AvgY;

- 利用atan与AvgX, AvgY可以得到平圴的phase (AvgPhase);

- AvgPeriod + AvgPhase就是SW_VSYNC。

当DispSync收到addPresentFence时(最多记录8个sample),每一个fence的时间算出(Time% AvgPeriod)的平方当作误差,将所有的Fence误差加总起来如果大于某个Threshold,就表示需要校正(DispSync::updateErrorLocked)。校正的方法是呼叫DispSync::beginResync()将所有的HW_VSYNC清掉,开启HW_VSYNC。等至少3个HW_VSYNC再重新计算。

处理VSync消息

消息处理流程依然需要分步骤:

1)我们继续回到DispSync的threadLoop函数中,回顾上面的内容,因为在这里我们需要搜集vsync触发事件:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

virtual bool threadLoop() { ...... while (true) { Vector<CallbackInvocation> callbackInvocations; ...... callbackInvocations = gatherCallbackInvocationsLocked(now); } if (callbackInvocations.size() > 0) { fireCallbackInvocations(callbackInvocations); } } ...... } |

mPeriod 不为0,也有signal,DispSyncThread线程不阻塞了,执行gatherCallbackInvocationsLocked(now)和fireCallbackInvocations(callbackInvocations)。我们先查看gatherCallbackInvocationsLocked函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 |

Vector<CallbackInvocation> gatherCallbackInvocationsLocked(nsecs_t now) { Vector<CallbackInvocation> callbackInvocations; nsecs_t ref = now - mPeriod; //这里的mEventListeners[i].mCallback都是驱动的事件DispSyncSource for (size_t i = 0; i < mEventListeners.size(); i++) { nsecs_t t = computeListenerNextEventTimeLocked(mEventListeners[i], ref); if (t < now) { CallbackInvocation ci; ci.mCallback = mEventListeners[i].mCallback; ci.mEventTime = t; callbackInvocations.push(ci); mEventListeners.editItemAt(i).mLastEventTime = t; } } return callbackInvocations; } |

然后发送事件,fireCallbackInvocations函数:

|

1 2 3 4 5 6 |

void fireCallbackInvocations(const Vector<CallbackInvocation>& callbacks) { for (size_t i = 0; i < callbacks.size(); i++) { //这里的callbacks[i].mCallback,就是驱动的事件DispSyncSource callbacks[i].mCallback->onDispSyncEvent(callbacks[i].mEventTime); } } |

这里会回调DispSyncSource的onDispSyncEvent函数:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

virtual void onDispSyncEvent(nsecs_t when) { sp<VSyncSource::Callback> callback; { Mutex::Autolock lock(mMutex); callback = mCallback; if (mTraceVsync) { mValue = (mValue + 1) % 2; ATRACE_INT(mVsyncEventLabel.string(), mValue); } } ////这里的callback为EventThread if (callback != NULL) { callback->onVSyncEvent(when); } } |

继续调用,这里已经从驱动事件,转化到驱动事件的线程EventThread中,填充EventThread的mVSyncEvent,因此我们查看onVSyncEvent函数:

|

1 2 3 4 5 6 7 8 |

void EventThread::onVSyncEvent(nsecs_t timestamp) { Mutex::Autolock _l(mLock); mVSyncEvent[0].header.type = DisplayEventReceiver::DISPLAY_EVENT_VSYNC; mVSyncEvent[0].header.id = 0; mVSyncEvent[0].header.timestamp = timestamp; mVSyncEvent[0].vsync.count++; mCondition.broadcast(); } |

这里将mVSyncEvent事件相关属性复制,且threadLoop等待阻塞唤醒,以便往下继续执行。

2)EventThread的waitForEvent(),返回signalConnections,就是开始建立的Connection,这个Connection里面有个BitTube的写fd,另外的读fd在MessageQueue中。

此时我们回到上面没有讲的,当waitForEvent返回后,2. 把事件分发给listener;3. 然后调用每个连接的postEvent来发送Event。这两个步骤。

waitForEvent返回,调用conn->postEvent(event),我们查看Connection的postEvent函数:

|

1 2 3 4 5 6 7 8 9 10 11 |

status_t EventThread::Connection::postEvent( const DisplayEventReceiver::Event& event) { ssize_t size = DisplayEventReceiver::sendEvents(mChannel, &event, 1); return size < 0 ? status_t(size) : status_t(NO_ERROR); } //位于frameworks/native/libs/gui/DisplayEventReceiver.cpp中 ssize_t DisplayEventReceiver::sendEvents(const sp<BitTube>& dataChannel, Event const* events, size_t count) { return BitTube::sendObjects(dataChannel, events, count); } |

也就是通过Connection的写fd将event发送给MessageQueue。