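Preface

The Binder driver is specific to Android, but underneath it follows the same framework as any other Linux driver. It registers itself as a misc device and is a purely virtual device driver: it is not tied to any real hardware, and its main job is to manage the device's memory, that is, the buffers that Binder data is copied into. This post walks through the Binder driver code on the Linux side.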

Binder driver code analysis

Since the Binder driver is a Linux driver, it has to follow the usual Linux driver conventions, so we start with its init function.

    static int __init binder_init(void)
    {
        int ret;

        // Create a single-threaded workqueue named "binder"
        binder_deferred_workqueue = create_singlethread_workqueue("binder");
        if (!binder_deferred_workqueue)
            return -ENOMEM;

        // Create the debugfs directories
        binder_debugfs_dir_entry_root = debugfs_create_dir("binder", NULL);
        if (binder_debugfs_dir_entry_root)
            binder_debugfs_dir_entry_proc = debugfs_create_dir("proc",
                                binder_debugfs_dir_entry_root);

        // Register Binder as a misc device driver
        ret = misc_register(&binder_miscdev);
        if (binder_debugfs_dir_entry_root) {
            debugfs_create_file("state", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                NULL, &binder_state_fops);
            debugfs_create_file("stats", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                NULL, &binder_stats_fops);
            debugfs_create_file("transactions", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                NULL, &binder_transactions_fops);
            debugfs_create_file("transaction_log", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                &binder_transaction_log,
                                &binder_transaction_log_fops);
            debugfs_create_file("failed_transaction_log", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                &binder_transaction_log_failed,
                                &binder_transaction_log_fops);
    #ifdef BINDER_MONITOR
            /* system_server is the main writer, remember to
             * change group as "system" for write permission
             * via related init.rc */
            debugfs_create_file("transaction_log_enable",
                                (S_IRUGO | S_IWUSR | S_IWGRP),
                                binder_debugfs_dir_entry_root,
                                NULL, &binder_transaction_log_enable_fops);
            debugfs_create_file("log_level",
                                (S_IRUGO | S_IWUSR | S_IWGRP),
                                binder_debugfs_dir_entry_root,
                                NULL, &binder_log_level_fops);
            debugfs_create_file("timeout_log", S_IRUGO,
                                binder_debugfs_dir_entry_root,
                                &binder_timeout_log_t,
                                &binder_timeout_log_fops);
    #endif
        }
        return ret;
    }

The call misc_register(&binder_miscdev) registers the Binder driver as a misc device. Here is the binder_miscdev structure and the file_operations it points to:

    static const struct file_operations binder_fops = {
        .owner = THIS_MODULE,
        .poll = binder_poll,
        .unlocked_ioctl = binder_ioctl,
        .compat_ioctl = binder_ioctl,
        .mmap = binder_mmap,
        .open = binder_open,
        .flush = binder_flush,
        .release = binder_release,
    };

    static struct miscdevice binder_miscdev = {
        .minor = MISC_DYNAMIC_MINOR,  // allocate the minor number dynamically
        .name = "binder",             // device name
        .fops = &binder_fops          // the driver's file_operations
    };

That covers the registration entry point, the init function. Next we go through the functions in Binder's file_operations structure.

binder_open function

    static int binder_open(struct inode *nodp, struct file *filp)
    {
        struct binder_proc *proc;

        binder_debug(BINDER_DEBUG_OPEN_CLOSE, "binder_open: %d:%d\n",
                     current->group_leader->pid, current->pid);

        // Allocate kernel memory for the binder_proc
        proc = kzalloc(sizeof(*proc), GFP_KERNEL);
        if (proc == NULL)
            return -ENOMEM;
        // Take a reference on the current task and remember it in proc->tsk
        get_task_struct(current);
        proc->tsk = current;
        // Initialise the todo list
        INIT_LIST_HEAD(&proc->todo);
        // Initialise the wait queue
        init_waitqueue_head(&proc->wait);
        // Use the current task's nice value as the default priority
        proc->default_priority = task_nice(current);
    #ifdef RT_PRIO_INHERIT
        proc->default_rt_prio = current->rt_priority;
        proc->default_policy = current->policy;
    #endif

        binder_lock(__func__);
        // Bump the count of created BINDER_STAT_PROC objects
        binder_stats_created(BINDER_STAT_PROC);
        // Add proc_node at the head of the global binder_procs list
        hlist_add_head(&proc->proc_node, &binder_procs);
        proc->pid = current->group_leader->pid;
        // Initialise the list of delivered death notifications
        INIT_LIST_HEAD(&proc->delivered_death);
        // Point filp->private_data at the binder_proc just created
        filp->private_data = proc;
        binder_unlock(__func__);

        if (binder_debugfs_dir_entry_proc) {
            char strbuf[11];

            snprintf(strbuf, sizeof(strbuf), "%u", proc->pid);
            proc->debugfs_entry = debugfs_create_file(strbuf, S_IRUGO,
                                    binder_debugfs_dir_entry_proc,
                                    proc, &binder_proc_fops);
        }

        return 0;
    }

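The main job of this function is to create a struct binder_proc that holds the context of the process opening /dev/binder, and to store it in the private_data member of the open file's struct file. Any later operation can then recover the process context from the struct file it is handed. The same context is also linked into the global list binder_procs (an hlist, as the hlist_add_head call shows) for the driver's internal use.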
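
The "context hangs off the open file" pattern is not specific to Binder, so it can be tried out in user space. The following is only a rough sketch: every sketch_* name is made up for illustration, and a plain singly linked list stands in for the kernel's hlist.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical user-space stand-ins; none of these are kernel types. */
struct sketch_proc {
    int pid;
    struct sketch_proc *next;   /* link in the global list */
};

struct sketch_file {
    void *private_data;         /* plays the role of struct file::private_data */
};

/* Global list head, playing the role of binder_procs. */
static struct sketch_proc *sketch_procs;

/* Like binder_open: allocate a context, publish it, attach it to the file. */
int sketch_open(struct sketch_file *filp, int pid)
{
    struct sketch_proc *proc;

    assert(filp != NULL);
    proc = calloc(1, sizeof(*proc));
    if (proc == NULL)
        return -1;
    proc->pid = pid;
    proc->next = sketch_procs;  /* insert at the head, like hlist_add_head */
    sketch_procs = proc;
    filp->private_data = proc;
    return 0;
}

/* Later operations recover the context from the file alone. */
struct sketch_proc *sketch_proc_of(const struct sketch_file *filp)
{
    assert(filp != NULL);
    return filp->private_data;
}

/* Walk the global list, as the driver's debug code does with binder_procs. */
size_t sketch_proc_count(void)
{
    size_t n = 0;
    const struct sketch_proc *p;

    for (p = sketch_procs; p != NULL; p = p->next)
        n++;
    return n;
}
```

Opening two "files" leaves two entries on the global list, with the most recent one at the head, while each file still finds its own context through private_data.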

binder_mmap function

    static int binder_mmap(struct file *filp, struct vm_area_struct *vma)
    {
        int ret;
        // Kernel virtual area
        struct vm_struct *area;
        struct binder_proc *proc = filp->private_data;
        const char *failure_string;
        struct binder_buffer *buffer;

        if (proc->tsk != current)
            return -EINVAL;
        // Cap the mapping at 4 MB
        if ((vma->vm_end - vma->vm_start) > SZ_4M)
            vma->vm_end = vma->vm_start + SZ_4M;

        /* ... */

        if (vma->vm_flags & FORBIDDEN_MMAP_FLAGS) {
            ret = -EPERM;
            failure_string = "bad vm_flags";
            goto err_bad_arg;
        }
        vma->vm_flags = (vma->vm_flags | VM_DONTCOPY) & ~VM_MAYWRITE;
        // Serialise with other mmap callers
        mutex_lock(&binder_mmap_lock);
        if (proc->buffer) {
            ret = -EBUSY;
            failure_string = "already mapped";
            goto err_already_mapped;
        }
        // Reserve a contiguous kernel virtual range the same size as the user mapping
        area = get_vm_area(vma->vm_end - vma->vm_start, VM_IOREMAP);
        if (area == NULL) {
            ret = -ENOMEM;
            failure_string = "get_vm_area";
            goto err_get_vm_area_failed;
        }
        // Start of the kernel virtual range
        proc->buffer = area->addr;
        // Offset between the user-space and kernel-space addresses
        proc->user_buffer_offset = vma->vm_start - (uintptr_t) proc->buffer;
        mutex_unlock(&binder_mmap_lock);

        /* ... */

        // Allocate the array of page pointers: one entry per PAGE_SIZE of the user mapping
        proc->pages = kzalloc(sizeof(proc->pages[0]) *
                              ((vma->vm_end - vma->vm_start) / PAGE_SIZE),
                              GFP_KERNEL);
        if (proc->pages == NULL) {
            ret = -ENOMEM;
            failure_string = "alloc page array";
            goto err_alloc_pages_failed;
        }
        proc->buffer_size = vma->vm_end - vma->vm_start;

        vma->vm_ops = &binder_vm_ops;
        vma->vm_private_data = proc;
        // Allocate physical pages and map them into both the kernel range and
        // the process's address space; only one page is allocated for now
        if (binder_update_page_range(proc, 1, proc->buffer,
                                     proc->buffer + PAGE_SIZE, vma)) {
            ret = -ENOMEM;
            failure_string = "alloc small buf";
            goto err_alloc_small_buf_failed;
        }
        // The first binder_buffer sits at the start of proc->buffer
        buffer = proc->buffer;
        // Initialise the process's buffers list
        INIT_LIST_HEAD(&proc->buffers);
        // Add this binder_buffer to the process's buffers list
        list_add(&buffer->entry, &proc->buffers);
        buffer->free = 1;
        // Insert the free buffer into proc->free_buffers
        binder_insert_free_buffer(proc, buffer);
        // Async transactions may use at most half of the buffer
        proc->free_async_space = proc->buffer_size / 2;
        barrier();
        proc->files = get_files_struct(current);
        proc->vma = vma;
        proc->vma_vm_mm = vma->vm_mm;

        /*pr_info("binder_mmap: %d %lx-%lx maps %pK\n",
           proc->pid, vma->vm_start, vma->vm_end, proc->buffer); */
        return 0;

    err_alloc_small_buf_failed:
        kfree(proc->pages);
        proc->pages = NULL;
    err_alloc_pages_failed:
        mutex_lock(&binder_mmap_lock);
        vfree(proc->buffer);
        proc->buffer = NULL;
    err_get_vm_area_failed:
    err_already_mapped:
        mutex_unlock(&binder_mmap_lock);
    err_bad_arg:
        pr_err("binder_mmap: %d %lx-%lx %s failed %d\n",
               proc->pid, vma->vm_start, vma->vm_end, failure_string, ret);
        return ret;
    }
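
The address bookkeeping in binder_mmap is easy to lose among the error paths. The sketch below replays just the arithmetic in user space: the 4 MB cap, the user/kernel offset, and the half-size async budget. All sketch_* names are made up for illustration, and the addresses in the usage note are arbitrary numbers, not real mappings.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_SZ_4M ((size_t)4 * 1024 * 1024)  /* same value as the kernel's SZ_4M */

/* Clamp a requested mapping length to 4 MB, as binder_mmap does. */
size_t sketch_clamp_len(size_t requested)
{
    return requested > SKETCH_SZ_4M ? SKETCH_SZ_4M : requested;
}

/* Mirrors: user_buffer_offset = vma->vm_start - (uintptr_t)proc->buffer */
ptrdiff_t sketch_user_offset(uintptr_t user_start, uintptr_t kernel_start)
{
    return (ptrdiff_t)(user_start - kernel_start);
}

/* Translate a kernel-side buffer address into the matching user address. */
uintptr_t sketch_to_user(uintptr_t kernel_addr, uintptr_t kernel_start,
                         ptrdiff_t offset)
{
    assert(kernel_addr >= kernel_start);  /* must lie inside the buffer */
    return kernel_addr + (uintptr_t)offset;
}

/* Half of the mapping is the budget for asynchronous transactions. */
size_t sketch_async_space(size_t buffer_size)
{
    return buffer_size / 2;
}
```

For example, with a kernel range starting at 0x2000000 and a user mapping starting at 0x1000000, the kernel address 0x2001000 corresponds to the user address 0x1001000. The offset is negative here, which is why the driver stores it in a signed ptrdiff_t.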

For background on memory mapping, vm_struct and vm_area_struct, see another post of mine (https://blog4jimmy.com/2018/01/348.html); reading it first should make the binder_mmap code easier to follow. Next, let's look at binder_update_page_range.

binder_update_page_range function

    static int binder_update_page_range(struct binder_proc *proc, int allocate,
                                        void *start, void *end,
                                        struct vm_area_struct *vma)
    {
        void *page_addr;
        unsigned long user_page_addr;
        struct vm_struct tmp_area;
        struct page **page;
        // Each process has its own mm_struct, which gives it an independent
        // virtual address space
        struct mm_struct *mm;

        binder_debug(BINDER_DEBUG_BUFFER_ALLOC,
                     "%d: %s pages %pK-%pK\n", proc->pid,
                     allocate ? "allocate" : "free", start, end);

        if (end <= start)
            return 0;

        trace_binder_update_page_range(proc, allocate, start, end);
        // vma is non-NULL when called from binder_mmap and NULL otherwise
        if (vma)
            mm = NULL;
        else
            mm = get_task_mm(proc->tsk);

        if (mm) {
            down_write(&mm->mmap_sem);
            vma = proc->vma;
            if (vma && mm != proc->vma_vm_mm) {
                pr_err("%d: vma mm and task mm mismatch\n", proc->pid);
                vma = NULL;
            }
        }
        // binder_mmap passes allocate == 1
        if (allocate == 0)
            goto free_range;

        if (vma == NULL) {
            pr_err
                ("%d: binder_alloc_buf failed to map pages in userspace, no vma\n",
                 proc->pid);
            goto err_no_vma;
        }
        // Allocate and map physical memory page by page; the call from
        // binder_mmap covers a single page
        for (page_addr = start; page_addr < end; page_addr += PAGE_SIZE) {
            int ret;

            page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];

            BUG_ON(*page);
            // Allocate one physical page
            *page = alloc_page(GFP_KERNEL | __GFP_HIGHMEM | __GFP_ZERO);
            if (*page == NULL) {
                pr_err("%d: binder_alloc_buf failed for page at %pK\n",
                       proc->pid, page_addr);
                goto err_alloc_page_failed;
            }
            tmp_area.addr = page_addr;
            tmp_area.size = PAGE_SIZE + PAGE_SIZE /* guard page? */;
            // Map the physical page into the kernel virtual range
            ret = map_vm_area(&tmp_area, PAGE_KERNEL, page);
            if (ret) {
                pr_err
                    ("%d: binder_alloc_buf failed to map page at %pK in kernel\n",
                     proc->pid, page_addr);
                goto err_map_kernel_failed;
            }
            // Map the same physical page into the process's virtual address space
            user_page_addr = (uintptr_t) page_addr + proc->user_buffer_offset;
            ret = vm_insert_page(vma, user_page_addr, page[0]);
            if (ret) {
                pr_err
                    ("%d: binder_alloc_buf failed to map page at %lx in userspace\n",
                     proc->pid, user_page_addr);
                goto err_vm_insert_page_failed;
            }
            /* vm_insert_page does not seem to increment the refcount */
        }
        if (mm) {
            up_write(&mm->mmap_sem);
            mmput(mm);
        }
        return 0;

    free_range:
        for (page_addr = end - PAGE_SIZE; page_addr >= start;
             page_addr -= PAGE_SIZE) {
            page = &proc->pages[(page_addr - proc->buffer) / PAGE_SIZE];
            if (vma)
                zap_page_range(vma, (uintptr_t) page_addr +
                               proc->user_buffer_offset, PAGE_SIZE, NULL);
    err_vm_insert_page_failed:
            unmap_kernel_range((unsigned long)page_addr, PAGE_SIZE);
    err_map_kernel_failed:
            __free_page(*page);
            *page = NULL;
    #ifdef MTK_BINDER_PAGE_USED_RECORD
            if (binder_page_used > 0)
                binder_page_used--;
            if (proc->page_used > 0)
                proc->page_used--;
    #endif
    err_alloc_page_failed:
            ;
        }
    err_no_vma:
        if (mm) {
            up_write(&mm->mmap_sem);
            mmput(mm);
        }
        return -ENOMEM;
    }

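The overall flow is as follows:

binder_mmap calls get_vm_area() to reserve kernel virtual address space and then calls binder_update_page_range. That function allocates physical memory with alloc_page(), maps it into the kernel virtual range with map_vm_area(), and then maps the same physical page into the user virtual address space with vm_insert_page().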
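
The part of binder_update_page_range that is hardest to read is the error handling, where the failure labels jump into the middle of the free_range loop so that pages set up earlier in the same call are torn down in reverse order. The following user-space sketch imitates only that shape: a bool array stands in for proc->pages, the 4 KB page size is an assumption, and fail_at is a hypothetical fault-injection parameter that does not exist in the driver.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define SKETCH_PAGE_SIZE 4096u   /* assumed page size */
#define SKETCH_MAX_PAGES 1024u   /* 4 MB / 4 KB */

struct sketch_map {
    bool present[SKETCH_MAX_PAGES];  /* stands in for proc->pages[] */
};

/* Index of the page backing byte offset off, like
 * (page_addr - proc->buffer) / PAGE_SIZE in the driver. */
size_t sketch_page_index(size_t off)
{
    return off / SKETCH_PAGE_SIZE;
}

/*
 * "Allocate" every page in [start, end), given as byte offsets from the
 * buffer base. fail_at is the page index whose allocation should fail,
 * or SKETCH_MAX_PAGES for no failure.
 * Returns 0 on success, -1 after undoing the pages set up by this call.
 */
int sketch_update_range(struct sketch_map *m, size_t start, size_t end,
                        size_t fail_at)
{
    size_t off;

    assert(m != NULL);
    assert(start % SKETCH_PAGE_SIZE == 0 && end % SKETCH_PAGE_SIZE == 0);
    assert(end <= (size_t)SKETCH_MAX_PAGES * SKETCH_PAGE_SIZE);

    for (off = start; off < end; off += SKETCH_PAGE_SIZE) {
        size_t idx = sketch_page_index(off);

        if (idx == fail_at)
            goto rollback;
        m->present[idx] = true;
    }
    return 0;

rollback:
    /* Walk backwards over the pages already set up, like free_range. */
    while (off > start) {
        off -= SKETCH_PAGE_SIZE;
        m->present[sketch_page_index(off)] = false;
    }
    return -1;
}
```

A first call covering one page succeeds, as in binder_mmap. A later call over pages 1 to 3 that fails on page 3 leaves pages 1 and 2 unset again and does not disturb page 0.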

binder_ioctl function

    static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)
    {
        int ret;
        struct binder_proc *proc = filp->private_data;
        struct binder_thread *thread;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;

        /*pr_info("binder_ioctl: %d:%d %x %lx\n",
           proc->pid, current->pid, cmd, arg); */

        trace_binder_ioctl(cmd, arg);
        // Sleep (interruptibly) until binder_stop_on_user_error < 2;
        // when that already holds, this returns immediately
        ret = wait_event_interruptible(binder_user_error_wait,
                                       binder_stop_on_user_error < 2);
        if (ret)
            goto err_unlocked;

        binder_lock(__func__);
        // Get the binder_thread for the calling thread
        thread = binder_get_thread(proc);
        if (thread == NULL) {
            ret = -ENOMEM;
            goto err;
        }

        switch (cmd) {
        // Binder read/write
        case BINDER_WRITE_READ:
            ret = binder_ioctl_write_read(filp, cmd, arg, thread);
            if (ret)
                goto err;
            break;
        // Set the maximum number of Binder threads
        case BINDER_SET_MAX_THREADS:
            if (copy_from_user(&proc->max_threads, ubuf,
                               sizeof(proc->max_threads))) {
                ret = -EINVAL;
                goto err;
            }
            break;
        // Set the Binder context manager, i.e. register ServiceManager
        // as the context manager
        case BINDER_SET_CONTEXT_MGR:
            ret = binder_ioctl_set_ctx_mgr(filp, thread);
            if (ret)
                goto err;
            break;
        // A Binder thread is exiting; release its resources
        case BINDER_THREAD_EXIT:
            binder_debug(BINDER_DEBUG_THREADS, "%d:%d exit\n",
                         proc->pid, thread->pid);
            binder_free_thread(proc, thread);
            thread = NULL;
            break;
        // Get the Binder protocol version
        case BINDER_VERSION:{
            struct binder_version __user *ver = ubuf;

            if (size != sizeof(struct binder_version)) {
                ret = -EINVAL;
                goto err;
            }
            if (put_user(BINDER_CURRENT_PROTOCOL_VERSION,
                         &ver->protocol_version)) {
                ret = -EINVAL;
                goto err;
            }
            break;
        }
        default:
            ret = -EINVAL;
            goto err;
        }
        ret = 0;
    err:
        if (thread)
            thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;
        binder_unlock(__func__);
        wait_event_interruptible(binder_user_error_wait,
                                 binder_stop_on_user_error < 2);
        if (ret && ret != -ERESTARTSYS)
            pr_info("%d:%d ioctl %x %lx returned %d\n",
                    proc->pid, current->pid, cmd, arg, ret);
    err_unlocked:
        trace_binder_ioctl_done(ret);
        return ret;
    }

Most of the functions called from binder_ioctl are fairly simple, so here we only look at the most frequently used one, binder_ioctl_write_read. binder_ioctl_set_ctx_mgr will be covered in detail in the ServiceManager part.

binder_ioctl_write_read function

    static int binder_ioctl_write_read(struct file *filp,
                                       unsigned int cmd, unsigned long arg,
                                       struct binder_thread *thread)
    {
        int ret = 0;
        struct binder_proc *proc = filp->private_data;
        unsigned int size = _IOC_SIZE(cmd);
        void __user *ubuf = (void __user *)arg;
        struct binder_write_read bwr;

        if (size != sizeof(struct binder_write_read)) {
            ret = -EINVAL;
            goto out;
        }
        // Copy the user-space data into the binder_write_read struct bwr
        if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
            ret = -EFAULT;
            goto out;
        }
        binder_debug(BINDER_DEBUG_READ_WRITE,
                     "%d:%d write %lld at %016llx, read %lld at %016llx\n",
                     proc->pid, thread->pid,
                     (u64) bwr.write_size, (u64) bwr.write_buffer,
                     (u64) bwr.read_size, (u64) bwr.read_buffer);
        // If the write buffer holds data, run the Binder write path
        if (bwr.write_size > 0) {
            ret = binder_thread_write(proc, thread,
                                      bwr.write_buffer,
                                      bwr.write_size, &bwr.write_consumed);
            trace_binder_write_done(ret);
            if (ret < 0) {
                bwr.read_consumed = 0;
                if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                    ret = -EFAULT;
                goto out;
            }
        }
        // If the caller supplied a read buffer (read_size > 0), run the Binder read path
        if (bwr.read_size > 0) {
            ret = binder_thread_read(proc, thread, bwr.read_buffer,
                                     bwr.read_size,
                                     &bwr.read_consumed,
                                     filp->f_flags & O_NONBLOCK);
            trace_binder_read_done(ret);
            if (!list_empty(&proc->todo)) {
                if (thread->proc != proc) {
                    int i;
                    unsigned int *p;

                    pr_debug("binder: " "thread->proc != proc\n");
                    p = (unsigned int *)thread - 32;
                    for (i = -4; i <= 3; i++, p += 8) {
                        pr_debug("%p %08x %08x %08x %08x %08x %08x %08x %08x\n",
                                 p, *(p), *(p + 1), *(p + 2),
                                 *(p + 3), *(p + 4), *(p + 5),
                                 *(p + 6), *(p + 7));
                    }

                    pr_debug("binder: thread->proc " "%p\n", thread->proc);
                    p = (unsigned int *)thread->proc - 32;
                    for (i = -4; i <= 5; i++, p += 8) {
                        pr_debug("%p %08x %08x %08x %08x %08x %08x %08x %08x\n",
                                 p, *(p), *(p + 1), *(p + 2),
                                 *(p + 3), *(p + 4), *(p + 5),
                                 *(p + 6), *(p + 7));
                    }

                    pr_debug("binder: proc %p\n", proc);
                    p = (unsigned int *)proc - 32;
                    for (i = -4; i <= 5; i++, p += 8) {
                        pr_debug("%p %08x %08x %08x %08x %08x %08x %08x %08x\n",
                                 p, *(p), *(p + 1), *(p + 2),
                                 *(p + 3), *(p + 4), *(p + 5),
                                 *(p + 6), *(p + 7));
                    }
                    BUG();
                }
                // Wake up threads waiting on the process
                wake_up_interruptible(&proc->wait);
            }
            if (ret < 0) {
                // If the read failed, copy bwr back to user space and return
                if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                    ret = -EFAULT;
                goto out;
            }
        }
        binder_debug(BINDER_DEBUG_READ_WRITE,
                     "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
                     proc->pid, thread->pid,
                     (u64) bwr.write_consumed, (u64) bwr.write_size,
                     (u64) bwr.read_consumed, (u64) bwr.read_size);
        // After the read/write completes, copy bwr back to user space
        if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
            ret = -EFAULT;
            goto out;
        }
    out:
        return ret;
    }
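
Stripped of the debugging code, binder_ioctl_write_read is a "write first, then read" exchange whose only results are the two consumed counters. The sketch below models that control flow in user space. It is an illustration under simplifying assumptions: the sketch_* names are invented, function pointers stand in for binder_thread_write and binder_thread_read, and the copy_from_user/copy_to_user steps are replaced by operating directly on the caller's struct.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical mirror of the size/consumed counters in binder_write_read. */
struct sketch_bwr {
    size_t write_size, write_consumed;
    size_t read_size, read_consumed;
};

/* Stand-in for binder_thread_write/read: returns 0 or a negative error. */
typedef int (*sketch_io_fn)(size_t size, size_t *consumed);

/*
 * Same ordering as binder_ioctl_write_read: write, then read.
 * If the write fails, read_consumed is reset to 0 and the read is skipped.
 */
int sketch_write_read(struct sketch_bwr *bwr,
                      sketch_io_fn do_write, sketch_io_fn do_read)
{
    int ret = 0;

    assert(bwr != NULL);
    if (bwr->write_size > 0) {
        ret = do_write(bwr->write_size, &bwr->write_consumed);
        if (ret < 0) {
            bwr->read_consumed = 0;
            return ret;
        }
    }
    if (bwr->read_size > 0)
        ret = do_read(bwr->read_size, &bwr->read_consumed);
    return ret;
}

/* Example handlers: consume everything, always fail, produce up to 8 bytes. */
int sketch_write_all(size_t size, size_t *consumed)
{
    *consumed = size;
    return 0;
}

int sketch_write_fail(size_t size, size_t *consumed)
{
    (void)size;
    (void)consumed;
    return -1;
}

int sketch_read_some(size_t size, size_t *consumed)
{
    *consumed = size < 8 ? size : 8;
    return 0;
}
```

A caller learns how far the driver got by comparing write_consumed with write_size and by looking at read_consumed, which is why the real function copies bwr back to user space on the failure paths as well as on success.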

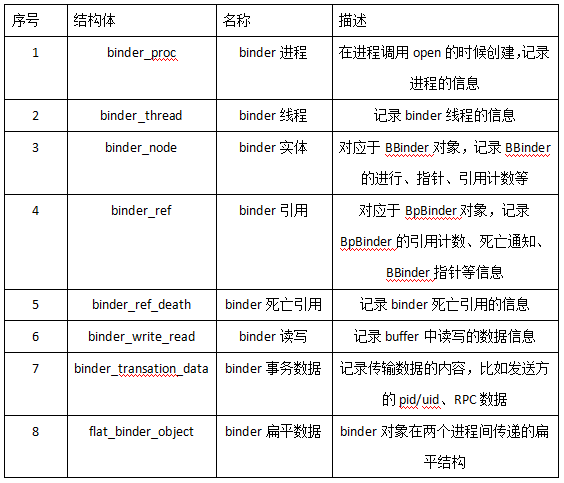

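Binder data structures

Note: this part is adapted from http://gityuan.com/2015/11/01/binder-driver/

Admittedly this part is rather dry. You can skip it for now and come back when you need it; I also went through the structs one by one with the help of other people's posts.

List of Binder structs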

binder_proc struct

    struct binder_proc {
        // Node in the global list of processes
        struct hlist_node proc_node;
        // Root of the red-black tree of binder_thread
        struct rb_root threads;
        // Root of the red-black tree of binder_node
        struct rb_root nodes;
        // Root of the red-black tree of binder_ref, keyed by handle
        struct rb_root refs_by_desc;
        // Root of the red-black tree of binder_ref, keyed by node
        struct rb_root refs_by_node;
        // Process pid
        int pid;
        // The process's virtual memory area for the Binder mapping
        struct vm_area_struct *vma;
        // The process's memory-management struct
        struct mm_struct *vma_vm_mm;
        // The process's task struct
        struct task_struct *tsk;
        // The process's files struct
        struct files_struct *files;
        struct hlist_node deferred_work_node;
        int deferred_work;
        // Start address of the kernel-side mapping
        void *buffer;
        // Offset between user-space and kernel-space addresses
        ptrdiff_t user_buffer_offset;

        // All buffers
        struct list_head buffers;
        // Free buffers
        struct rb_root free_buffers;
        // Allocated buffers
        struct rb_root allocated_buffers;
        // Free space available to async transactions
        size_t free_async_space;

        // Array of pointers to the physical pages
        struct page **pages;
        // Size of the mapped kernel range
        size_t buffer_size;
        // Total free memory
        uint32_t buffer_free;
        // Todo list of pending work
        struct list_head todo;
        // Wait queue
        wait_queue_head_t wait;
        // Binder statistics
        struct binder_stats stats;
        // Death notifications already delivered
        struct list_head delivered_death;
        // Maximum number of threads
        int max_threads;
        // Number of threads requested
        int requested_threads;
        // Number of requested threads that have started
        int requested_threads_started;
        // Number of ready threads
        int ready_threads;
        // Default priority
        long default_priority;
        // debugfs entry
        struct dentry *debugfs_entry;
    #ifdef RT_PRIO_INHERIT
        unsigned long default_rt_prio:16;
        unsigned long default_policy:16;
    #endif
    #ifdef BINDER_MONITOR
        struct binder_buffer *large_buffer;
    #endif
    };

binder_node struct

    struct binder_node {
        // Debug ID
        int debug_id;
        // Binder work item
        struct binder_work work;
        union {
            // Used while the node is alive
            struct rb_node rb_node;
            // Used once the node is dead
            struct hlist_node dead_node;
        };
        // Process that owns this node
        struct binder_proc *proc;
        // List of all Binder refs pointing at this node
        struct hlist_head refs;
        // Internal strong reference count
        int internal_strong_refs;
        // Local weak reference count
        int local_weak_refs;
        // Local strong reference count
        int local_strong_refs;
        // User-space pointer for this binder_node
        binder_uintptr_t ptr;
        // Extra data
        binder_uintptr_t cookie;
        unsigned has_strong_ref:1;
        unsigned pending_strong_ref:1;
        unsigned has_weak_ref:1;
        unsigned pending_weak_ref:1;
        unsigned has_async_transaction:1;
        unsigned accept_fds:1;
        unsigned min_priority:8;
        struct list_head async_todo;
    #ifdef BINDER_MONITOR
        char name[MAX_SERVICE_NAME_LEN];
    #endif
    };

binder_ref struct

    struct binder_ref {
        /* Lookups needed: */
        /*   node + proc => ref (transaction) */
        /*   desc + proc => ref (transaction, inc/dec ref) */
        /*   node => refs + procs (proc exit) */
        // Debug ID
        int debug_id;
        // Node in the red-black tree keyed by desc
        struct rb_node rb_node_desc;
        // Node in the red-black tree keyed by node
        struct rb_node rb_node_node;
        struct hlist_node node_entry;
        // Binder process
        struct binder_proc *proc;
        // Binder node
        struct binder_node *node;
        // handle
        uint32_t desc;
        // Strong reference count
        int strong;
        // Weak reference count
        int weak;
        // Death notification
        struct binder_ref_death *death;
    };

binder_ref_death struct

    struct binder_ref_death {
        // Binder work item
        struct binder_work work;
        // Pointer to the BpBinder proxy object for the death notification
        binder_uintptr_t cookie;
    };

binder_write_read struct

    struct binder_write_read {
        // Total number of bytes in write_buffer
        binder_size_t write_size;       /* bytes to write */
        // Number of bytes of write_buffer already processed
        binder_size_t write_consumed;   /* bytes consumed by driver */
        // Pointer to write_buffer
        binder_uintptr_t write_buffer;
        // Total number of bytes in read_buffer
        binder_size_t read_size;        /* bytes to read */
        // Number of bytes of read_buffer already processed
        binder_size_t read_consumed;    /* bytes consumed by driver */
        // Pointer to read_buffer
        binder_uintptr_t read_buffer;
    };

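write_buffer and read_buffer each hold Binder protocol commands; for transaction commands, the payload that follows the command code is a binder_transaction_data struct.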
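
To make the buffer layout concrete, here is a small user-space sketch that packs "command word followed by payload" records into a byte buffer, which is the shape the driver walks when it consumes write_buffer. The command value SKETCH_CMD_DEMO and all sketch_* helpers are invented for illustration; real BC_*/BR_* codes and payload types come from the Binder UAPI header and are not reproduced here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Illustrative command code only, not a real Binder command. */
#define SKETCH_CMD_DEMO 0x1234u

/*
 * Append one "32-bit command + payload" record to buf at *used.
 * Returns 0 on success, -1 if the record does not fit in cap bytes.
 */
int sketch_append_cmd(uint8_t *buf, size_t cap, size_t *used,
                      uint32_t cmd, const void *payload, size_t len)
{
    assert(buf != NULL && used != NULL && *used <= cap);
    if (len > cap - *used || sizeof(cmd) > cap - *used - len)
        return -1;
    memcpy(buf + *used, &cmd, sizeof(cmd));
    *used += sizeof(cmd);
    if (len > 0)
        memcpy(buf + *used, payload, len);
    *used += len;
    return 0;
}

/* Read the command word at byte offset off, the first step of parsing. */
uint32_t sketch_peek_cmd(const uint8_t *buf, size_t off)
{
    uint32_t cmd;

    assert(buf != NULL);
    memcpy(&cmd, buf + off, sizeof(cmd));
    return cmd;
}
```

In this model, the final value of used is what a caller would put in write_size, and the number of bytes the consumer has parsed so far corresponds to write_consumed.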

binder_transaction_data struct

    struct binder_transaction_data {
        /* The first two are only used for bcTRANSACTION and brTRANSACTION,
         * identifying the target and contents of the transaction.
         */
        union {
            /* target descriptor of command transaction */
            // The binder_ref, i.e. the handle
            __u32 handle;
            /* target descriptor of return transaction */
            // Memory address of the binder_node
            binder_uintptr_t ptr;
        } target;
        // BBinder pointer
        binder_uintptr_t cookie;    /* target object cookie */
        // RPC code: the command code agreed between client and server
        __u32 code;                 /* transaction command */

        /* General information about the transaction. */
        // Flags
        __u32 flags;
        // Sender's pid
        pid_t sender_pid;
        // Sender's effective uid
        uid_t sender_euid;
        // Number of bytes of data
        binder_size_t data_size;    /* number of bytes of data */
        // Number of bytes of offsets for the IPC objects
        binder_size_t offsets_size; /* number of bytes of offsets */

        /* If this transaction is inline, the data immediately
         * follows here; otherwise, it ends with a pointer to
         * the data buffer.
         */
        union {
            struct {
                /* transaction data */
                // Start of the data area
                binder_uintptr_t buffer;
                /* offsets from buffer to flat_binder_object structs */
                // Offsets of the IPC objects within the data area
                binder_uintptr_t offsets;
            } ptr;
            __u8 buf[8];
        } data;
    };

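- target: a BpBinder is addressed by handle and a BBinder by ptr, which is why a union is used;

- code: for example, registering a service uses the code ADD_SERVICE_TRANSACTION, and looking up a service uses CHECK_SERVICE_TRANSACTION;

- data: the whole data area. data.ptr.buffer is the start address of the data passed to the Binder driver, and data.ptr.offsets points to the offsets that locate the IPC objects inside that data;

- cookie: holds the BBinder pointer;

- data_size: the size of the parcel data carried by this transfer;

- offsets_size: the size in bytes of the offsets for the IPC objects being passed; dividing it by the size of one entry tells you how many Binder objects were passed.

With 64-bit IPC each entry is 8 bytes; with 32-bit IPC each entry is 4 bytes.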
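
As a worked example of the offsets_size arithmetic, the sketch below derives the object count for the 64-bit case and adds a bounds check of the kind any consumer of these fields would want before touching an object. It is an assumption-laden illustration: sketch_binder_size_t is a stand-in typedef for the 8-byte entry, and sketch_offsets_valid is a hypothetical helper, not a function from the driver.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for one offsets entry on a 64-bit IPC ABI (8 bytes). */
typedef uint64_t sketch_binder_size_t;

/* Number of objects described by an offsets array of offsets_size bytes. */
size_t sketch_object_count(size_t offsets_size)
{
    assert(offsets_size % sizeof(sketch_binder_size_t) == 0);
    return offsets_size / sizeof(sketch_binder_size_t);
}

/*
 * Return 1 if every offset leaves room for an object of obj_size bytes
 * inside a data area of data_size bytes, 0 otherwise.
 */
int sketch_offsets_valid(const sketch_binder_size_t *offsets,
                         size_t offsets_size,
                         size_t data_size, size_t obj_size)
{
    size_t n = sketch_object_count(offsets_size);
    size_t i;

    assert(offsets != NULL || n == 0);
    for (i = 0; i < n; i++) {
        if (obj_size > data_size || offsets[i] > data_size - obj_size)
            return 0;
    }
    return 1;
}
```

With two 8-byte entries, offsets_size is 16 and the count is 2. Offsets 0 and 24 are acceptable for 24-byte objects in a 48-byte data area, but not in a 40-byte one.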

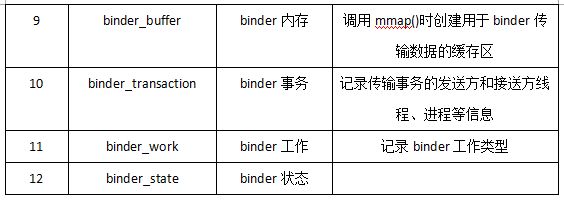

flat_binder_object struct

    /*
     * This is the flattened representation of a Binder object for transfer
     * between processes. The 'offsets' supplied as part of a binder transaction
     * contains offsets into the data where these structures occur. The Binder
     * driver takes care of re-writing the structure type and data as it moves
     * between processes.
     */
    // flat_binder_object is the flattened form of a Binder object, used when
    // passing it between two processes.
    struct flat_binder_object {
        /* 8 bytes for large_flat_header. */
        // Type
        __u32 type;
        // Records the priority and whether file descriptors are accepted
        __u32 flags;

        /* 8 bytes of data. */
        union {
            // Used when passing a binder_node: the address of the
            // binder_node in the application
            binder_uintptr_t binder;    /* local object */
            // Used when passing a binder_ref: the Binder's reference
            // number within the process
            __u32 handle;               /* remote object */
        };

        /* extra data associated with local object */
        // Only valid for a binder_node: its extra data
        binder_uintptr_t cookie;
    };

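The possible values of type come from an enum; the values referred to in the notes below are BINDER_TYPE_BINDER, BINDER_TYPE_WEAK_BINDER, BINDER_TYPE_HANDLE, BINDER_TYPE_WEAK_HANDLE and BINDER_TYPE_FD.

Notes:

- When type is BINDER_TYPE_BINDER or BINDER_TYPE_WEAK_BINDER, a Server process is registering a service with the ServiceManager process, so a binder_node object is created;

- When type is BINDER_TYPE_HANDLE or BINDER_TYPE_WEAK_HANDLE, a Client process is requesting a proxy from a Server process, so a binder_ref object is created;

- When type is BINDER_TYPE_FD, one process is sending a file descriptor to another; only a file is opened, so no object needs to be created.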
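
The three notes above amount to a small decision table, which can be written down as a switch. The enum below is purely illustrative: its names and values are placeholders of my own, not the real BINDER_TYPE_* constants from the Binder UAPI header.

```c
#include <assert.h>

/* Placeholder type tags; NOT the real BINDER_TYPE_* values. */
enum sketch_type {
    SKETCH_TYPE_BINDER,
    SKETCH_TYPE_WEAK_BINDER,
    SKETCH_TYPE_HANDLE,
    SKETCH_TYPE_WEAK_HANDLE,
    SKETCH_TYPE_FD
};

/* Which driver-side object the notes associate with each type. */
enum sketch_object {
    SKETCH_OBJ_NODE,   /* binder_node */
    SKETCH_OBJ_REF,    /* binder_ref */
    SKETCH_OBJ_NONE    /* nothing to create */
};

enum sketch_object sketch_object_for(enum sketch_type type)
{
    switch (type) {
    case SKETCH_TYPE_BINDER:
    case SKETCH_TYPE_WEAK_BINDER:
        return SKETCH_OBJ_NODE;  /* local object being published */
    case SKETCH_TYPE_HANDLE:
    case SKETCH_TYPE_WEAK_HANDLE:
        return SKETCH_OBJ_REF;   /* remote object being referenced */
    case SKETCH_TYPE_FD:
        return SKETCH_OBJ_NONE;  /* plain file descriptor */
    }
    assert(!"unknown type");
    return SKETCH_OBJ_NONE;
}
```

The strong and weak variants of each kind fall into the same branch; in this sketch they differ only in which reference count would be affected, which is not modelled here.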

binder_buffer struct

    struct binder_buffer {
        // Entry in the list of free and allocated buffers, ordered by address
        struct list_head entry;   /* free and allocated entries by address */
        // Node in the red-black tree of free buffers (by size) or
        // allocated buffers (by address)
        struct rb_node rb_node;   /* free entry by size or allocated entry */
                                  /* by address */
        // Whether this buffer is free
        unsigned free:1;
        // Whether user space is allowed to free this buffer
        unsigned allow_user_free:1;
        // Whether this buffer belongs to an async transaction
        unsigned async_transaction:1;
        // Debug ID
        unsigned debug_id:29;
        // The Binder transaction using this buffer
        struct binder_transaction *transaction;
    #ifdef BINDER_MONITOR
        struct binder_transaction_log_entry *log_entry;
    #endif
        // Target Binder node
        struct binder_node *target_node;
        // Size of the data
        size_t data_size;
        // Size of the offsets area
        size_t offsets_size;
        // The data itself
        uint8_t data[0];
    };

binder_transaction struct

    struct binder_transaction {
        // Debug ID
        int debug_id;
        // Binder work item
        struct binder_work work;
        // Sending thread
        struct binder_thread *from;
        // Previous transaction on the sender's side
        struct binder_transaction *from_parent;
        // Receiving process
        struct binder_proc *to_proc;
        // Receiving thread
        struct binder_thread *to_thread;
        // Next transaction on the receiver's side
        struct binder_transaction *to_parent;
        // Whether a reply is needed
        unsigned need_reply:1;
        /* unsigned is_dead:1; *//* not used at the moment */
        // Data buffer
        struct binder_buffer *buffer;
        // Transaction code (the method being called)
        unsigned int code;
        // Flags
        unsigned int flags;
        // Priority
        long priority;
        // Saved priority
        long saved_priority;
        // Sender's effective uid
        kuid_t sender_euid;
    #ifdef RT_PRIO_INHERIT
        unsigned long rt_prio:16;
        unsigned long policy:16;
        unsigned long saved_rt_prio:16;
        unsigned long saved_policy:16;
    #endif
    };

binder_work struct

    struct binder_work {
        struct list_head entry;
        enum {
            BINDER_WORK_TRANSACTION = 1,
            BINDER_WORK_TRANSACTION_COMPLETE,
            BINDER_WORK_NODE,
            BINDER_WORK_DEAD_BINDER,
            BINDER_WORK_DEAD_BINDER_AND_CLEAR,
            BINDER_WORK_CLEAR_DEATH_NOTIFICATION,
        } type;
    };
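
binder_work appears as an embedded member of binder_node, binder_ref_death and binder_transaction in the structs above, and binder_proc has a todo list. One way to read this is that the small work item is what gets queued, and the type field tells the consumer which enclosing struct to recover. The sketch below shows that idea in user space. It is a simplification with invented sketch_* names: a hand-rolled singly linked FIFO replaces struct list_head, and sketch_container_of is a local macro modelled on the kernel's container_of.

```c
#include <assert.h>
#include <stddef.h>

/* Cut-down stand-in for the binder_work type enum. */
enum sketch_work_type {
    SKETCH_WORK_TRANSACTION = 1,
    SKETCH_WORK_TRANSACTION_COMPLETE
};

struct sketch_work {
    struct sketch_work *next;
    enum sketch_work_type type;
};

/* A larger object that embeds its work item, as binder_transaction does. */
struct sketch_transaction {
    int debug_id;
    struct sketch_work work;
};

struct sketch_todo {
    struct sketch_work *head;
    struct sketch_work *tail;
};

/* Recover the enclosing struct from a pointer to its embedded member. */
#define sketch_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Append at the tail so work is handled in arrival order. */
void sketch_enqueue(struct sketch_todo *q, struct sketch_work *w)
{
    assert(q != NULL && w != NULL);
    w->next = NULL;
    if (q->tail != NULL)
        q->tail->next = w;
    else
        q->head = w;
    q->tail = w;
}

/* Remove and return the oldest work item, or NULL if the queue is empty. */
struct sketch_work *sketch_dequeue(struct sketch_todo *q)
{
    struct sketch_work *w;

    assert(q != NULL);
    w = q->head;
    if (w == NULL)
        return NULL;
    q->head = w->next;
    if (q->head == NULL)
        q->tail = NULL;
    return w;
}
```

A consumer dequeues a sketch_work, checks its type, and for SKETCH_WORK_TRANSACTION uses sketch_container_of(w, struct sketch_transaction, work) to get back to the transaction that owns it.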